Large language models (LLMs) have transformed conversational platforms with their ability to understand prompts and generate coherent responses in many languages. Recent research at UC San Diego set out to determine whether models such as GPT-4 have become advanced enough to be mistaken for humans. The study used a Turing test, a method proposed by Alan Turing to assess whether a machine can exhibit conversational behavior indistinguishable from a human's.

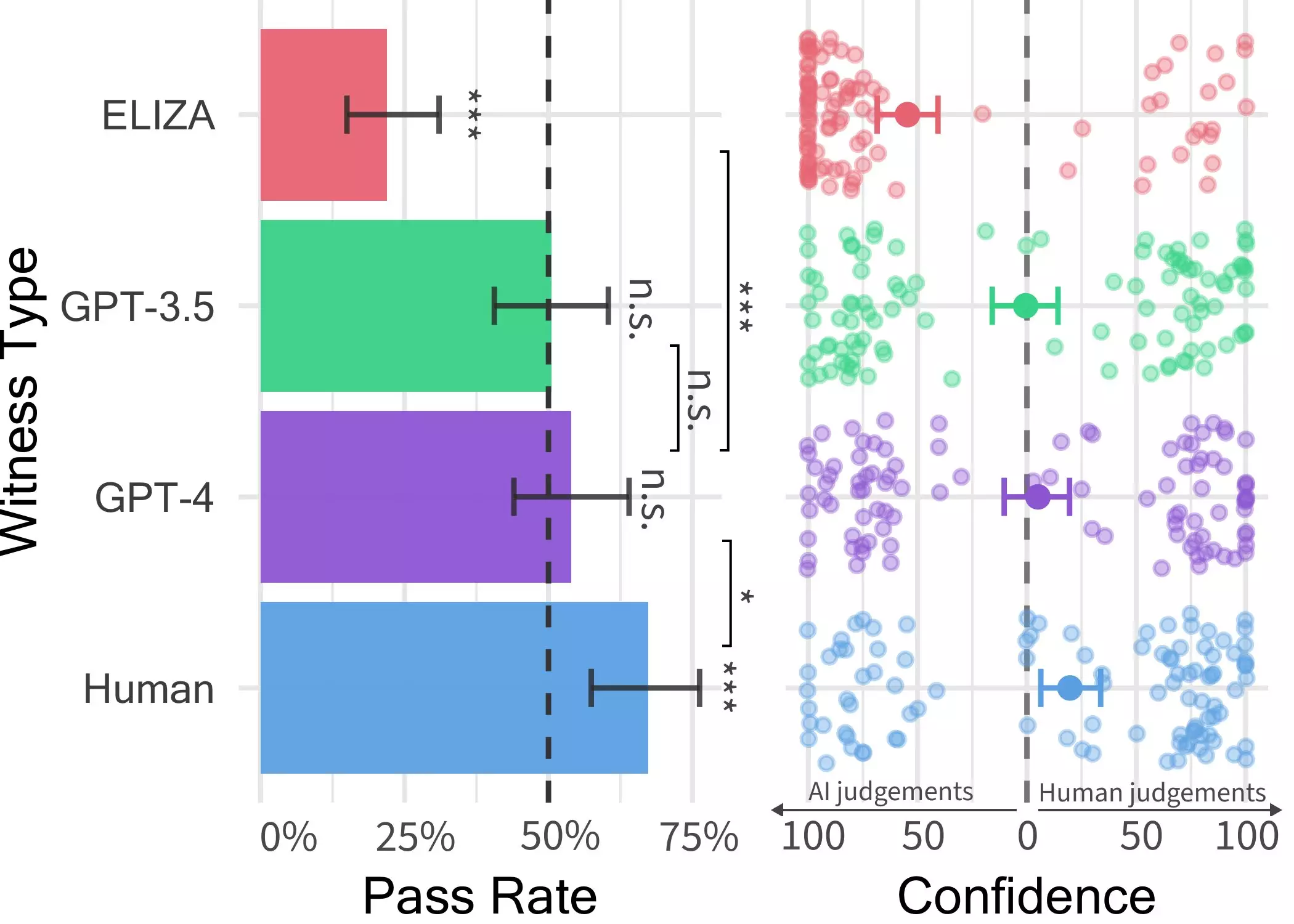

The initial findings indicated that GPT-4 could pass as human in approximately 50% of interactions. The researchers then refined the study to account for variables that could have affected these results, and the follow-up experiment offered further insights into the capabilities of these language models.

Experiment Design and Findings

In the two-player game devised by the UC San Diego team, led by Cameron Jones, human participants acted as interrogators and conversed with a “witness” that was either another human or an AI agent. After questioning the witness, each participant judged whether they had been talking to a human or a machine. Participants could often identify ELIZA and GPT-3.5 as machines, but their judgments about GPT-4 were no more accurate than a random guess.

Implications for Human-AI Interactions

These findings suggest that, in real-world settings, people may struggle to tell whether they are conversing with a human or an AI system. This blurring of lines could enable more deception, with consequences in areas such as customer-facing roles, fraud, and the spread of misinformation. As systems like GPT-4 continue to advance, better strategies for distinguishing human from machine interaction become increasingly critical.

The results underscore the need to address the challenges that LLMs pose on conversational platforms. As models like GPT-4 become more convincingly human-like, people may face growing uncertainty about who they are talking to online. To explore this further, the researchers plan to expand the study with more complex scenarios, such as three-person interactions involving both humans and AI systems. This future work aims to clarify how people interact with, and perceive, large language models.

The UC San Diego study highlights how far large language models have advanced and what this means for human-machine interaction. The ability of models like GPT-4 to mimic human conversational patterns so closely calls for continued research and vigilance as AI technology develops. As the boundary between human and machine conversation blurs, understanding the capabilities and limitations of these models becomes essential for fostering trust and transparency in digital communication.