In recent years, deep neural networks (DNNs) have transformed many real-world applications. However, concerns have been raised about the fairness of these AI models, as some studies have revealed disparities in performance that depend on factors such as the training data and the hardware platform. To address this issue, researchers at the University of Notre Dame set out to investigate how hardware systems can contribute to the fairness of AI.

While extensive research has focused on algorithmic fairness, the role of hardware in influencing AI fairness has been largely overlooked. The study by Shi and his colleagues aimed to fill this gap by exploring how emerging hardware designs, particularly computing-in-memory (CiM) devices, affect the fairness of DNNs. Their experiments revealed that hardware plays a crucial role in determining the fairness of AI models.

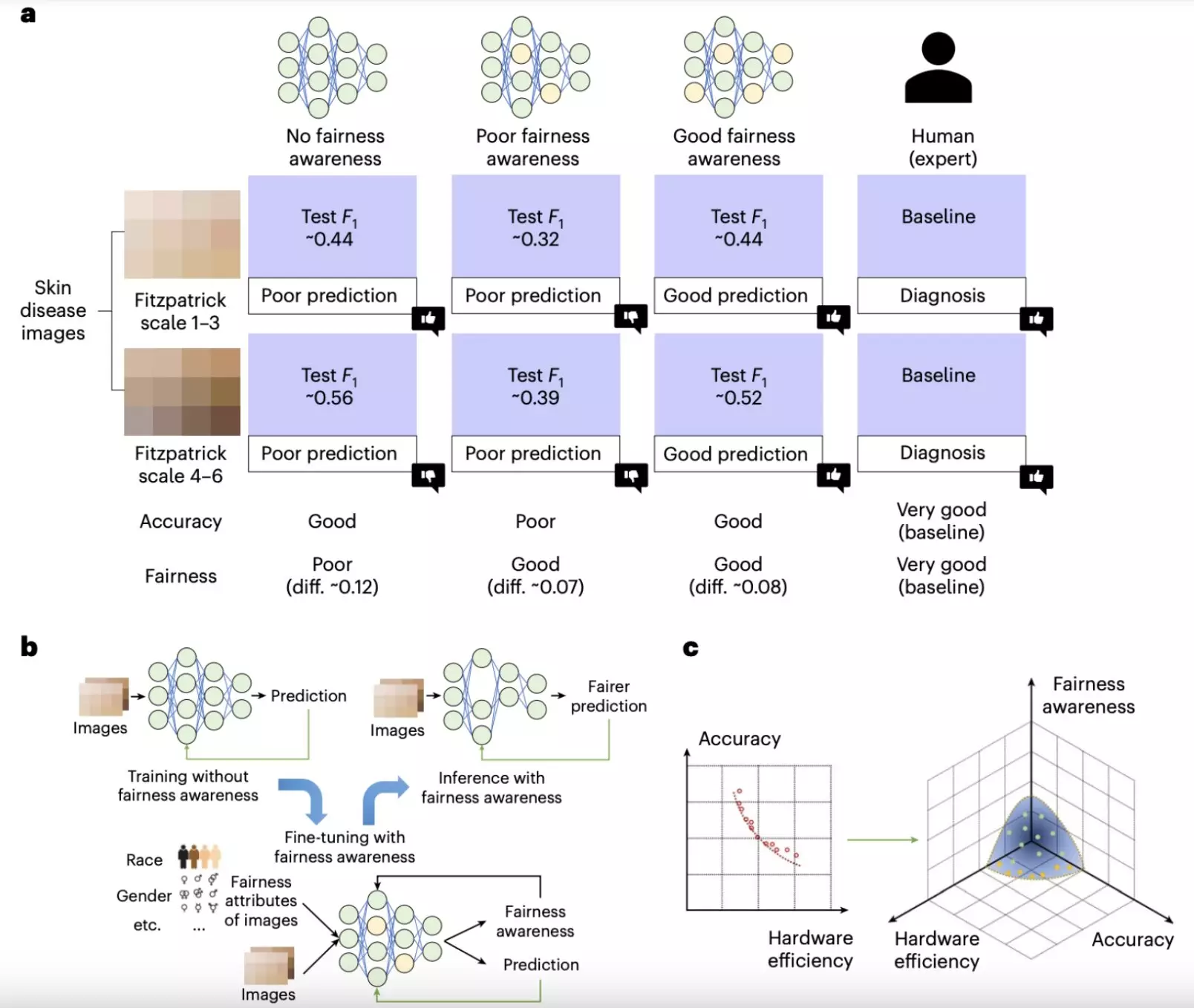

One of the key findings of the study was the impact of hardware-aware neural architecture designs on fairness. The researchers observed that larger and more complex neural networks tended to exhibit greater fairness. However, deploying these models on resource-constrained devices posed significant challenges. To address this, the researchers proposed strategies to compress larger models while maintaining their performance, thus enhancing fairness without imposing high computational demands.

Another set of experiments focused on non-idealities in hardware systems, such as device variability and stuck-at faults. The researchers found that these non-idealities could lead to trade-offs between the accuracy and fairness of AI models. To mitigate them, the researchers suggested noise-aware training strategies, which introduce controlled noise during model training to improve robustness and fairness without significantly increasing computational requirements.
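To make the idea of noise-aware training more concrete, here is a minimal sketch in plain Python. It is not the method used in the study: the tiny logistic-regression model, the Gaussian noise model, the stuck-at-zero fault model, and every function name and parameter (`add_device_variation`, `apply_stuck_at_zero`, `train_noise_aware`, `sigma`, `fault_rate`) are assumptions chosen only for illustration. The point it shows is that the weights are perturbed on each training pass, so the model learns parameters that still work when the hardware does not store them exactly.

```python
import math
import random


def add_device_variation(weights, sigma, rng):
    """Return a noisy copy of the weights (assumed Gaussian device variability).

    The noise standard deviation is sigma times the largest weight magnitude.
    """
    scale = sigma * max(abs(w) for w in weights)
    return [w + rng.gauss(0.0, 1.0) * scale for w in weights]


def apply_stuck_at_zero(weights, fault_rate, rng):
    """Return a copy in which each weight is forced to 0.0 with probability fault_rate."""
    return [0.0 if rng.random() < fault_rate else w for w in weights]


def predict(weights, x):
    """Logistic-regression probability for a single sample."""
    z = sum(w * xi for w, xi in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-z))


def train_noise_aware(samples, labels, sigma=0.1, lr=0.5, epochs=200, seed=0):
    """Gradient descent in which every pass sees a freshly perturbed copy of the weights."""
    rng = random.Random(seed)
    weights = [0.0] * len(samples[0])
    for _ in range(epochs):
        # Evaluate the model with noisy weights, as the hardware would.
        noisy = add_device_variation(weights, sigma, rng)
        grad = [0.0] * len(weights)
        for x, y in zip(samples, labels):
            err = predict(noisy, x) - y
            for j, xj in enumerate(x):
                grad[j] += err * xj / len(samples)
        # Apply the update to the clean weights that will later be deployed.
        weights = [w - lr * g for w, g in zip(weights, grad)]
    return weights


if __name__ == "__main__":
    # Toy data: first feature is a bias term, second feature decides the label.
    samples = [[1.0, 2.0], [1.0, -2.0], [1.0, 1.5], [1.0, -1.0]]
    labels = [1, 0, 1, 0]
    trained = train_noise_aware(samples, labels)
    # Simulate deployment on faulty hardware with a 10% stuck-at-zero rate.
    deployed = apply_stuck_at_zero(trained, 0.1, random.Random(1))
    print(trained, deployed)
```

Setting `sigma=0.0` reduces this to ordinary training, which makes it easy to compare a noise-aware model against a baseline under the same simulated faults.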

The study highlighted the importance of considering both hardware design and AI model structures to achieve a balance between accuracy and fairness. Larger, more resource-intensive models generally performed better in terms of fairness but required advanced hardware. This underscores the need for developers to prioritize both hardware and software components when designing AI systems for sensitive applications.
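The article does not say which fairness metric the researchers used. As one hedged illustration of how accuracy and fairness can be tracked side by side, the sketch below computes accuracy per group and the gap between the best and worst group. The metric choice and the names `group_accuracies` and `accuracy_gap` are assumptions made for this example, not details from the study.

```python
def group_accuracies(predictions, labels, groups):
    """Return a dict mapping each group to the accuracy on that group's samples."""
    correct, total = {}, {}
    for pred, label, group in zip(predictions, labels, groups):
        total[group] = total.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + (1 if pred == label else 0)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(predictions, labels, groups):
    """Difference between the best and worst per-group accuracy (0.0 means equal accuracy)."""
    accuracies = group_accuracies(predictions, labels, groups)
    return max(accuracies.values()) - min(accuracies.values())


if __name__ == "__main__":
    # Hypothetical predictions for two groups, "a" and "b".
    predictions = [1, 0, 1, 1]
    labels = [1, 0, 0, 1]
    groups = ["a", "a", "b", "b"]
    print(group_accuracies(predictions, labels, groups))
    print(accuracy_gap(predictions, labels, groups))
```

Under this assumed metric, a model and hardware combination with high overall accuracy can still show a large gap, which is the kind of trade-off the study describes.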

Moving forward, the research team plans to delve deeper into the intersection of hardware design and AI fairness. They aim to develop cross-layer co-design frameworks that optimize neural network architectures for fairness while considering hardware constraints. Additionally, adaptive training techniques will be explored to address the variability and limitations of different hardware systems, ensuring fair AI models across diverse platforms.

The study sheds light on the crucial role of hardware in ensuring the fairness of AI models. By considering hardware implications in AI development, developers can create systems that are both accurate and equitable, accommodating users with diverse physical and ethnic characteristics. Future research in this area holds the potential to advance the field of AI fairness and pave the way for innovative hardware solutions designed with fairness as a primary objective.
