Researchers at the University of Chicago, in collaboration with Argonne National Laboratory, have developed a classical algorithm that simulates Gaussian boson sampling (GBS) experiments. The work, published in *Nature Physics*, clarifies how today's noisy quantum devices actually behave and points toward ways in which classical and quantum computing can complement each other across scientific fields.

Gaussian boson sampling has emerged as a promising way to demonstrate quantum advantage, the point at which a quantum computer outperforms classical machines on a specific computationally intensive task. Previous studies have suggested that GBS is very hard for classical computers to simulate, particularly under ideal conditions.

The picture becomes more complicated in real experimental environments. As Assistant Professor Bill Fefferman noted, the noise and photon loss inherent in these experiments add a layer of complexity that must be addressed. Major experiments from teams at institutions such as the University of Science and Technology of China and Xanadu have shown that such noise often makes clear claims of quantum advantage questionable. These results have prompted researchers to examine GBS methodologies and their limitations more critically.

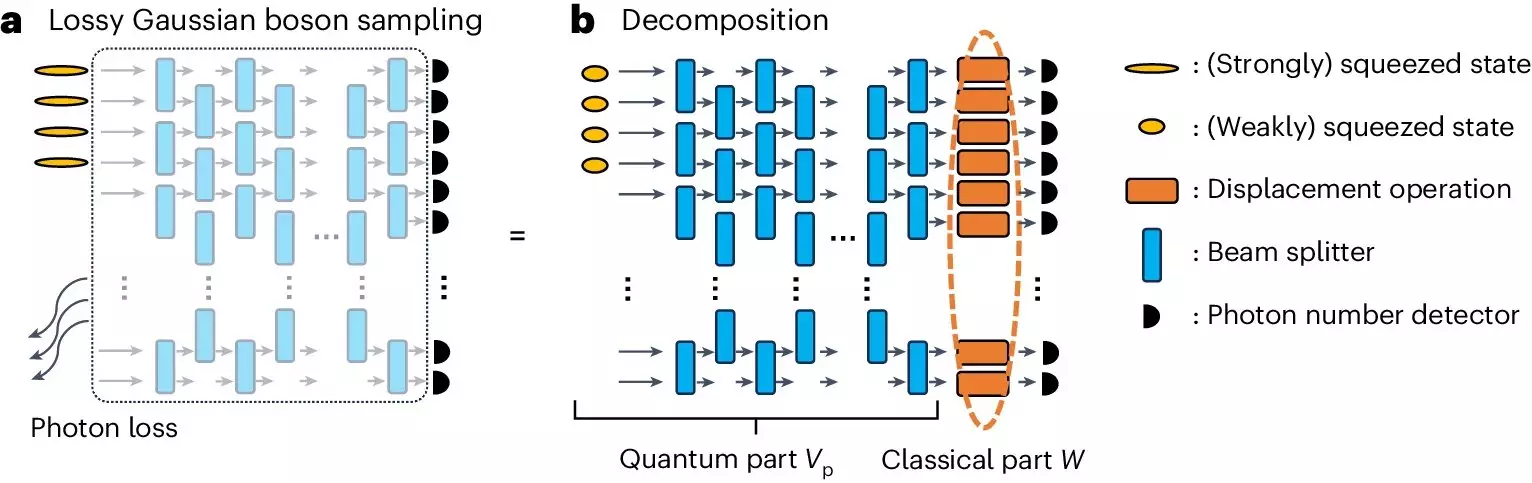

The new algorithm responds to these experimental challenges by exploiting the high photon loss rates that are common in GBS experiments. The researchers used a classical tensor-network approach that takes advantage of how quantum states behave under noisy conditions. In several benchmarks, the simulation outperformed some state-of-the-art GBS experiments.
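To make the role of photon loss concrete, the following Python sketch applies the textbook pure-loss channel to a single-mode squeezed vacuum state. It is not the researchers' tensor-network algorithm; it only illustrates why heavy loss makes a squeezed state look more like ordinary vacuum. The function names, the squeezing parameter `r`, and the transmission `eta` are assumptions chosen for illustration, with variances expressed in units where the vacuum variance equals 1.

```python
import math


def lossy_squeezed_variances(r, eta):
    """Quadrature variances of squeezed vacuum after a pure-loss channel.

    r   : squeezing parameter (illustrative input, r >= 0)
    eta : transmission, i.e. fraction of photons that survive (0..1)
    Variances are in units where the vacuum variance is 1.
    """
    v_minus = eta * math.exp(-2 * r) + (1 - eta)  # squeezed quadrature
    v_plus = eta * math.exp(2 * r) + (1 - eta)    # anti-squeezed quadrature
    return v_minus, v_plus


def purity(r, eta):
    """Purity of the lossy single-mode Gaussian state (1 means pure)."""
    v_minus, v_plus = lossy_squeezed_variances(r, eta)
    return 1.0 / math.sqrt(v_minus * v_plus)


if __name__ == "__main__":
    r = 1.0  # hypothetical squeezing level
    for eta in (1.0, 0.7, 0.3, 0.1):
        v_minus, v_plus = lossy_squeezed_variances(r, eta)
        print(f"eta={eta:.1f}  squeezed var={v_minus:.3f}  "
              f"anti-squeezed var={v_plus:.3f}  purity={purity(r, eta):.3f}")
```

As `eta` falls, the squeezed variance climbs back toward the vacuum value of 1, so less of the state's distinctly quantum character survives. This is the general regime that a loss-aware classical simulation can take advantage of.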

Fefferman presents this result not as evidence that quantum computing is inferior but as an opportunity to refine our understanding of what quantum devices can do. The algorithm reproduces GBS results more accurately and, in doing so, raises critical questions about the validity of quantum advantage claims made for existing experiments.

The findings also indicate that increasing photon transmission rates and using more squeezed states could significantly improve the performance of GBS experiments. This insight has direct implications for the design of future quantum experiments and could broaden the practical applications of quantum technologies.
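The article does not say which performance metric the researchers used, so the sketch below relies on two simple stand-ins from standard quantum optics: the expected number of detected photons and the squeezing that survives loss, measured in decibels. All function and parameter names are hypothetical, and every source is assumed to have the same squeezing parameter `r` and the same transmission `eta`.

```python
import math


def mean_detected_photons(r, eta, num_sources):
    """Expected photons reaching the detectors.

    Each squeezed vacuum source emits sinh(r)^2 photons on average,
    and uniform loss keeps a fraction eta of them.
    """
    return num_sources * eta * math.sinh(r) ** 2


def residual_squeezing_db(r, eta):
    """Squeezing left after loss, in dB below the vacuum noise level."""
    squeezed_variance = eta * math.exp(-2 * r) + (1 - eta)
    return -10 * math.log10(squeezed_variance)


if __name__ == "__main__":
    r = 1.0  # hypothetical squeezing parameter
    for eta in (0.3, 0.6, 0.9):
        for num_sources in (25, 50):
            photons = mean_detected_photons(r, eta, num_sources)
            db = residual_squeezing_db(r, eta)
            print(f"eta={eta:.1f}  sources={num_sources:3d}  "
                  f"detected photons={photons:6.1f}  squeezing={db:.2f} dB")
```

Under these assumptions, raising `eta` preserves more squeezing per mode, while adding sources increases the number of detected photons. Both effects move an experiment away from the lossy regime.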

The implications of this research extend beyond GBS itself. As the technology matures, quantum computing could find substantial applications in fields ranging from cryptography to materials science. For example, quantum methods could lead to more secure communication protocols and stronger data protection.

In materials science, quantum simulations could speed up the discovery of novel materials with unique properties, which in turn could drive advances in sectors such as energy storage and manufacturing.

Ultimately, the interplay between quantum and classical computing is crucial to harnessing the strengths of both. Combining the two will support efforts to tackle complex problems across many industries, from optimizing supply chains to improving artificial intelligence algorithms.

Building on a Solid Research Foundation

The team's earlier work laid the foundation for this study. Fefferman, in collaboration with Professor Liang Jiang and former postdoc Changhun Oh, has extensively explored the computational power and limitations of noisy intermediate-scale quantum (NISQ) devices. Each successive paper has deepened the understanding of how photon loss affects GBS and has refined the frameworks for simulating it.

They have also proposed architectures that improve programmability and resilience against photon loss, making large-scale GBS experiments more feasible. In addition, their quantum-inspired classical algorithms for graph-theoretical and quantum chemistry problems underscore the value of studying GBS simulation.

Looking Ahead: A Future Shaped by Quantum Insights

This classical simulation algorithm underscores the need for rigorous research in both classical and quantum computing. By improving the simulation of GBS and offering insight into how quantum performance should be measured, it serves as a bridge toward further developments in quantum technologies.

As quantum computing continues to evolve, with challenges and opportunities along the way, this research marks one step in an ongoing effort that could reshape problem-solving in many sectors. The pursuit of quantum advantage encourages academic inquiry and may also yield practical solutions to some of the complex questions facing modern society.
