On August 1, 2024, the European Union’s AI Act entered into force, ushering in a new era of regulatory oversight with a comprehensive framework that governs the development and deployment of artificial intelligence within the EU. As AI systems find broader applications, the legislation aims to mitigate the risks they pose, particularly in sensitive areas such as healthcare, hiring, and financial services. The Act reflects the EU’s recognition of AI’s potential dangers and aims to protect people from discriminatory practices and other harmful consequences.

Despite the regulation’s sweeping scope, many developers may be unsure whether and how it applies to their work. A joint study led by Holger Hermanns, a computer science professor at Saarland University, and Anne Lauber-Rönsberg, a legal expert at Dresden University of Technology, examines how the AI Act affects programmers’ day-to-day work. Their forthcoming paper, “AI Act for the Working Programmer,” addresses the key questions developers have about complying with the new law.

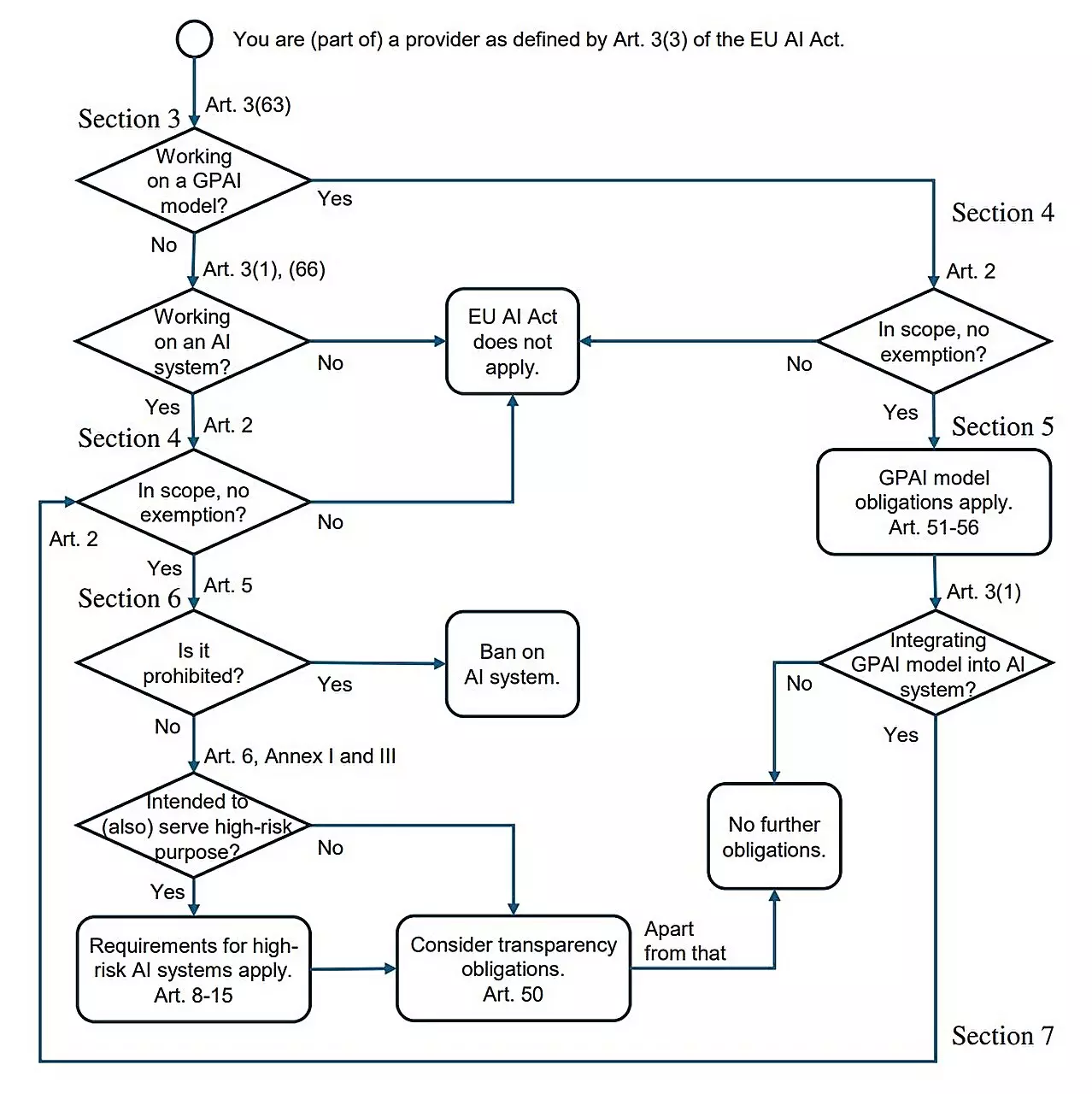

The most pressing question developers ask is a simple one: “What do I need to know about this legislation to do my job?” Because the AI Act runs to 144 pages, the challenge is to distill its provisions into actionable guidance for the people who build AI systems in practice. Few programmers will read the full document, so they need summaries that highlight the sections most relevant to them.

The AI Act sorts systems into risk levels and defines high-risk systems as those that could significantly affect people’s lives, such as algorithmic recruitment tools or medical diagnostic software. Professor Hermanns illustrates the distinction with an example: a company that builds AI to screen job applications must comply with the AI Act and, in particular, must ensure that the system is not trained on biased data that could lead it to discriminate unjustly against certain applicants.

By contrast, lower-risk AI applications, such as algorithms designed for games or non-intrusive data processing, are largely unaffected by the Act. As Hermanns points out, these applications can still be developed and deployed without significant regulatory constraints. So while the AI Act sets a stringent framework for high-stakes systems, many programmers can continue their work with minimal disruption.
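For teams that want a first internal check, the distinction above can be expressed as a simple triage step. The sketch below is hypothetical: the tier names and use-case tags come only from the examples in this article, it does not reproduce the Act’s legal categories, and anything it does not recognize is routed to legal review rather than assumed to be low-risk.

```python
from enum import Enum


class RiskTier(Enum):
    """Illustrative tiers only; the Act's actual categories are defined in its legal text."""
    HIGH = "high"
    LOWER = "lower"
    NEEDS_REVIEW = "needs_review"


# Hypothetical tags taken from the examples in this article, not an official list.
HIGH_RISK_USES = {"recruitment", "medical_diagnostics"}
LOWER_RISK_USES = {"video_game", "non_intrusive_data_processing"}


def triage(use_case: str) -> RiskTier:
    """Return a first-pass tier for a use-case tag; unknown tags go to legal review."""
    tag = use_case.strip().lower()
    if tag in HIGH_RISK_USES:
        return RiskTier.HIGH
    if tag in LOWER_RISK_USES:
        return RiskTier.LOWER
    return RiskTier.NEEDS_REVIEW


if __name__ == "__main__":
    for use in ["recruitment", "video_game", "chatbot"]:
        print(use, "->", triage(use).value)
```

Defaulting unknown cases to review, rather than to the lower tier, keeps such a helper from quietly waving through a system that the Act may in fact treat as high-risk.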

Developers of high-risk AI systems face rigorous requirements. They must provide transparent documentation of how the system works, comparable to a user manual. They must also maintain logs that make the AI’s actions and decisions traceable, enabling oversight and accountability. The model here is aviation safety, where every incident is meticulously recorded.
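As an illustration of what such a log might look like in code, the sketch below assumes a hypothetical job-screening model that appends one JSON line per decision. The function name and field names (`model_version`, `input_sha256`, `decision`) are assumptions for illustration; the Act requires traceability but does not prescribe this format.

```python
import hashlib
import io
import json
from datetime import datetime, timezone
from typing import Any, Dict, TextIO


def log_decision(stream: TextIO, model_version: str,
                 features: Dict[str, Any], decision: str) -> Dict[str, Any]:
    """Append one decision record to `stream` as a JSON line and return the record.

    The input is stored only as a SHA-256 hash of its canonical JSON form, so a
    logged decision can later be matched to the original record without copying
    personal data into the log. Field names are hypothetical.
    """
    # Sorted keys make the hash independent of dictionary ordering.
    canonical = json.dumps(features, sort_keys=True, separators=(",", ":"))
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "input_sha256": hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
        "decision": decision,
    }
    stream.write(json.dumps(record, sort_keys=True) + "\n")
    return record


if __name__ == "__main__":
    buf = io.StringIO()  # stand-in for durable, append-only storage
    log_decision(buf, "screener-1.3", {"years_experience": 4, "degree": "BSc"}, "shortlist")
    log_decision(buf, "screener-1.3", {"years_experience": 1, "degree": "none"}, "reject")
    print(buf.getvalue())
```

Hashing the input rather than storing it keeps personal data out of the log while still letting an auditor check a logged decision against the original application.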

Developers must also ensure that their training datasets are diverse and representative, so that bias does not distort the AI’s outcomes. Compliance therefore calls for a proactive approach in which programmers build ethical considerations into design and training from the start. This shift toward responsible AI development meets regulatory demands and also resonates with increasingly conscientious consumers.
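One simple way to start checking representativeness is to compare each group’s share of the training data with a reference distribution. The sketch below assumes the developer supplies those reference shares and a tolerance; the function name, the metric, and the 5% default are illustrative assumptions, not requirements from the AI Act, and a real bias audit would go well beyond counting.

```python
from collections import Counter
from typing import Dict, Iterable


def representation_gaps(groups: Iterable[str],
                        reference_shares: Dict[str, float],
                        tolerance: float = 0.05) -> Dict[str, float]:
    """Return groups whose share of the data falls short of the reference share by more than `tolerance`.

    Each value is (observed share - reference share), so it is negative for an
    under-represented group. Groups absent from the data count as share 0.
    """
    counts = Counter(groups)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no training examples")
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts[group] / total  # Counter returns 0 for missing keys
        if observed < expected - tolerance:
            gaps[group] = round(observed - expected, 3)
    return gaps


if __name__ == "__main__":
    # Hypothetical applicant records: 80% group A, 20% group B.
    training_groups = ["A"] * 80 + ["B"] * 20
    print(representation_gaps(training_groups, {"A": 0.5, "B": 0.5}))  # prints {'B': -0.3}
```

Only shortfalls are flagged here, since the concern in the text is groups the model sees too rarely; over-represented groups could be reported the same way if needed.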

Although the AI Act constrains high-risk systems, it preserves freedom for research and development in both the public and private sectors. This balance between oversight and innovation is meant to maintain Europe’s competitive edge in the global AI landscape. Critics often fear that stringent regulation will stifle innovation, but experts such as Hermanns are confident that the AI Act will not hold back Europe’s progress in AI. It could even set a precedent for similar regulatory frameworks in other regions.

As developers build these legal requirements into their workflows, they are likely to produce safer and more ethically developed AI systems. The AI Act thus pioneers a legal structure that balances risk management with technological progress, giving programmers a framework for building AI that aligns with societal values and needs and fostering trust in the technology.

The European Union’s AI Act marks a crucial step toward the responsible governance of artificial intelligence. By scaling its obligations to the risk an application poses, it attempts to regulate a complex technology without stalling it. As the framework evolves, the future of AI development will hinge not only on compliance but on a shared commitment to ethical innovation that benefits society as a whole, and in that way the Act could establish a sustainable model for how technology is governed.
