Collaboration drives much of the progress in artificial intelligence, and natural language processing is no exception. Just as a team of professionals pools its expertise to tackle complex problems, newer algorithms let language models do something similar. Co-LLM, an algorithm recently introduced by researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), applies this principle: it pairs a general-purpose large language model (LLM) with a specialized one to produce answers that are more accurate and cheaper to compute.

The limitations of general-purpose LLMs have become increasingly apparent. While these models are versatile, they often struggle with niche topics requiring deep expertise. When confronted with complex inquiries, such as medical or scientific queries, a general LLM may provide a surface-level response that lacks the precision needed for practical applications. This is where cross-model collaboration can take center stage.

Imagine asking an LLM to explain the intricacies of a specific pharmaceutical compound. The general model might deliver a vague answer riddled with inaccuracies. In a human context, one would likely reach out to a pharmacist or medical professional for clarity. Co-LLM mimics this decision-making process within the digital domain, enabling a general model to ‘phone a friend’ — a specialized model trained on domain-specific knowledge — to refine its output.

The Mechanics of Co-LLM

At the core of the Co-LLM framework lies a “switch variable,” which acts like a project manager within the algorithm. As the general model generates its answer, the switch judges how reliable each word (or token) is likely to be. When the general model seems unsure of a token, the switch can hand that token over to the specialized model, which may supply a more accurate alternative.

For instance, consider a question about extinct bear species. As the general-purpose LLM constructs its answer, the switch variable identifies the spots where additional expertise would help, such as supplying the year a species went extinct. This token-level interplay lets Co-LLM improve its responses and, through training, learn where the expert's contribution is most useful.
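The token-by-token handoff described above can be sketched in a few lines of Python. This is a minimal illustration, not the researchers' implementation: the `Model` interface, the `collaborate` function, the fixed confidence `threshold` standing in for the learned switch, and the scripted toy models are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical interface: a model maps the tokens so far to
# (next_token, confidence in [0, 1]).
Model = Callable[[List[str]], Tuple[str, float]]


@dataclass
class Step:
    token: str
    source: str  # "general" or "expert"


def collaborate(general: Model, expert: Model, prompt: List[str],
                max_tokens: int, threshold: float = 0.5) -> List[Step]:
    """Generate token by token, deferring to the expert on low-confidence tokens."""
    context = list(prompt)
    steps: List[Step] = []
    for _ in range(max_tokens):
        token, confidence = general(context)
        source = "general"
        # Stand-in for the switch: defer when confidence is below the threshold.
        if confidence < threshold:
            token, _ = expert(context)
            source = "expert"
        if token == "<eos>":
            break
        steps.append(Step(token, source))
        context.append(token)
    return steps


def scripted(outputs: List[Tuple[str, float]], offset: int) -> Model:
    """Toy model that replays fixed (token, confidence) pairs by position."""
    def model(context: List[str]) -> Tuple[str, float]:
        i = len(context) - offset
        return outputs[i] if i < len(outputs) else ("<eos>", 1.0)
    return model


prompt = ["When", "did", "the", "species", "die", "out", "?"]
# Placeholder tokens are used instead of real dates to avoid inventing facts.
general = scripted([("It", 0.9), ("vanished", 0.8), ("around", 0.9),
                    ("<GUESSED_YEAR>", 0.2), (".", 0.9)], len(prompt))
expert = scripted([("It", 0.9), ("vanished", 0.9), ("around", 0.9),
                   ("<EXPERT_YEAR>", 0.9), (".", 0.9)], len(prompt))

steps = collaborate(general, expert, prompt, max_tokens=10)
for step in steps:
    print(f"{step.token:15s} <- {step.source}")
```

In this toy run only the year token falls below the threshold, so the expert is consulted exactly once and the general model writes everything else.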

Co-LLM adapts readily to different fields. In their experiments, the MIT researchers used domain-specific datasets, such as BioASQ for biomedical questions, to train the general-purpose model to recognize where it falls short and to call on the expert at those points. Whether it is answering a medical question or solving a complex mathematical problem, Co-LLM can switch between models so that responses are grounded in specialized knowledge.
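One way to picture how a model could learn where to defer without token-level labels is to treat the switch as a hidden choice and score each training token by its probability summed over both choices. The sketch below assumes that mixture formulation; the article does not spell out the training objective, and the function names and probabilities here are hypothetical.

```python
import math
from typing import Sequence


def token_marginal(p_defer: float, p_general: float, p_expert: float) -> float:
    """Probability of the observed token, summed over the switch's two choices."""
    return (1.0 - p_defer) * p_general + p_defer * p_expert


def sequence_nll(p_defer: Sequence[float], p_general: Sequence[float],
                 p_expert: Sequence[float]) -> float:
    """Negative log marginal likelihood of a token sequence (lower is better)."""
    return -sum(math.log(token_marginal(d, g, e))
                for d, g, e in zip(p_defer, p_general, p_expert))


# A token the general model finds unlikely (0.1) but the expert finds likely (0.9):
# a switch that leans toward deferring is rewarded with a lower loss.
loss_defer = sequence_nll([0.8], [0.1], [0.9])
loss_keep = sequence_nll([0.2], [0.1], [0.9])
print(loss_defer < loss_keep)
```

Under this assumed objective, minimizing the loss pushes the deferral probability up exactly on the tokens where the expert is more likely to be right.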

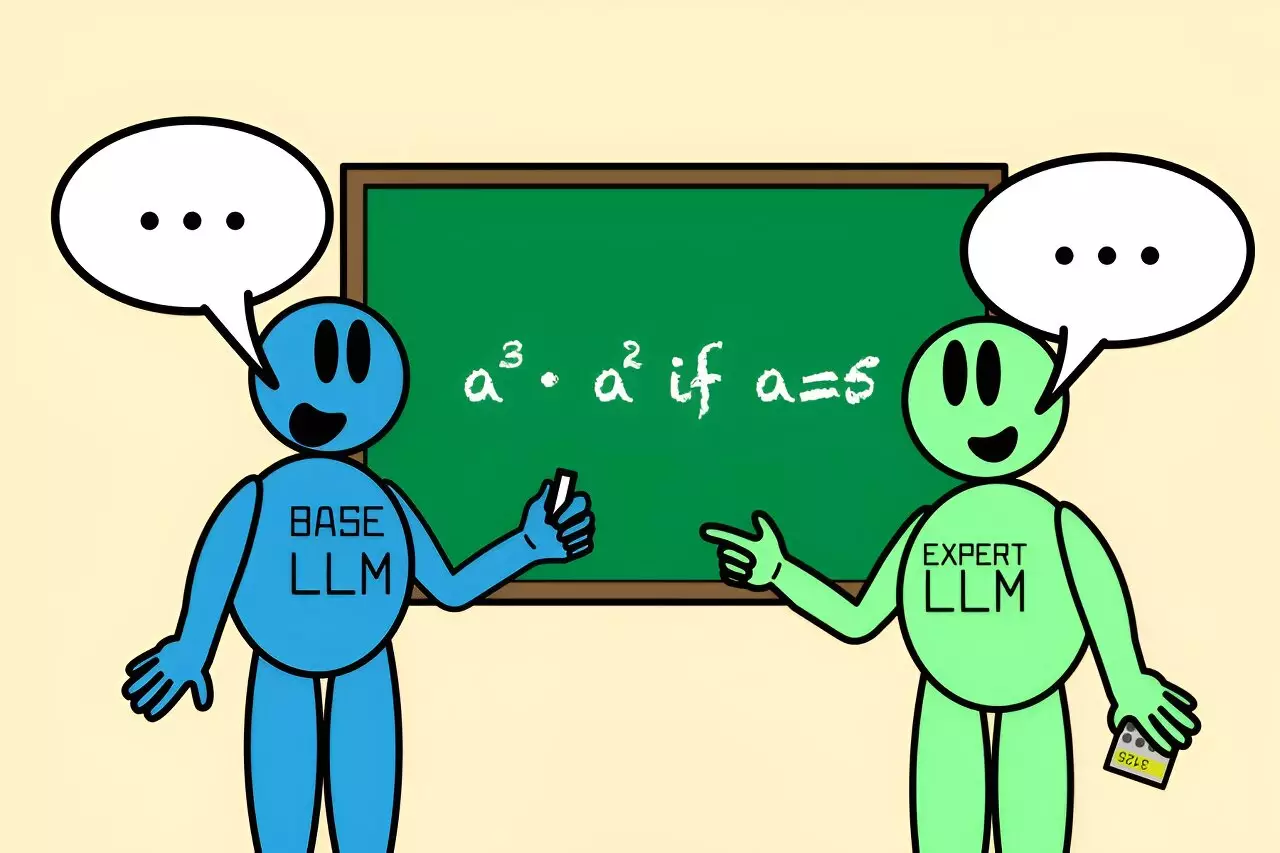

In one demonstration, given the problem “a³ · a² if a=5,” a general-purpose LLM working alone incorrectly answered 125. With help from the expert math model Llemma, Co-LLM arrived at the correct result, 3,125. In the researchers' experiments, the collaborative approach also outperformed fine-tuned general models and untuned specialized models that tackled the same problems independently.
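The arithmetic behind that example follows directly from the product rule for exponents:

$$a^3 \cdot a^2 = a^{3+2} = a^5, \qquad a = 5 \;\Rightarrow\; 5^5 = 3125.$$

The incorrect value 125 equals $5^3$, the first factor on its own. That is consistent with a model that dropped the $a^2$ term, although the article does not say how the error arose.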

One distinguishing feature of Co-LLM is how efficiently it allocates computational resources. Unlike approaches that must run every model at every step, Co-LLM activates the specialist model selectively, only for the tokens that need it. This token-wise activation conserves processing power while still raising the quality of the answer, a critical advantage when information must be both quick and accurate.
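A back-of-the-envelope estimate shows why selective activation saves compute. The sketch below assumes the general model runs on every token and the expert runs only on deferred tokens; the function name and the cost figures are hypothetical, chosen purely for illustration.

```python
def expected_cost_per_token(general_cost: float, expert_cost: float,
                            defer_rate: float) -> float:
    """Average cost per token when the expert runs only on deferred tokens."""
    if not 0.0 <= defer_rate <= 1.0:
        raise ValueError("defer_rate must be between 0 and 1")
    return general_cost + defer_rate * expert_cost


# Illustrative units: the expert is assumed to cost 4x the general model.
always_both = expected_cost_per_token(1.0, 4.0, defer_rate=1.0)
selective = expected_cost_per_token(1.0, 4.0, defer_rate=0.25)
print(always_both, selective)  # 5.0 2.0
```

With these made-up numbers, deferring on a quarter of the tokens costs well under half of what running both models at every step would.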

This efficiency is a significant departure from earlier methods such as “Proxy Tuning,” which requires all of its component models to be trained similarly. By relying on a more organic collaboration mechanism, Co-LLM opens up new potential for real-time information processing.

The researchers’ vision for Co-LLM extends beyond its current implementations. Future enhancements may include a sophisticated self-correction mechanism informed by human feedback, allowing for real-time adjustments when the specialized model fails to deliver a valid response. Furthermore, the team seeks to refine the general model in tandem with new expert model data, thereby keeping the knowledge base relevant and updated.

Such forward-thinking applications could see Co-LLM assist in generating enterprise documents or improving specialized, private models that remain secure within their operating environments. This adaptability not only furthers the capabilities of LLMs but also offers real-world applicability where accuracy and efficiency are paramount.

Co-LLM represents a paradigm shift in how LLMs can be orchestrated to share knowledge effectively. By mimicking human collaborative behavior, this algorithm offers a refreshing take on overcoming the limitations of traditional models. As researchers continue to refine the algorithm and explore new avenues of integration, the promise of Co-LLM stands to redefine the future of language processing, inviting a more nuanced approach towards artificial intelligence that prioritizes both accuracy and efficiency.
