The rapid proliferation of deep learning tools across various sectors, including healthcare and finance, underscores their transformative potential. However, the reliance on computationally intensive models brings forward significant security challenges, particularly in safeguarding sensitive information during cloud-based processing. Researchers at MIT have risen to this challenge by developing a novel security protocol that employs quantum principles to ensure the integrity and confidentiality of data during deep learning applications.

In recent years, the integration of artificial intelligence (AI) into critical domains has sparked a surge of innovation. Yet as models like GPT-4 grow more capable, they demand more computational resources, which are predominantly hosted on cloud servers. This reliance on external servers raises data privacy concerns, particularly in sensitive contexts such as healthcare, where organizations may be reluctant to expose patient information. The MIT researchers’ protocol combines quantum mechanics and deep learning, using the quantum properties of light to secure the data exchanged during computation.

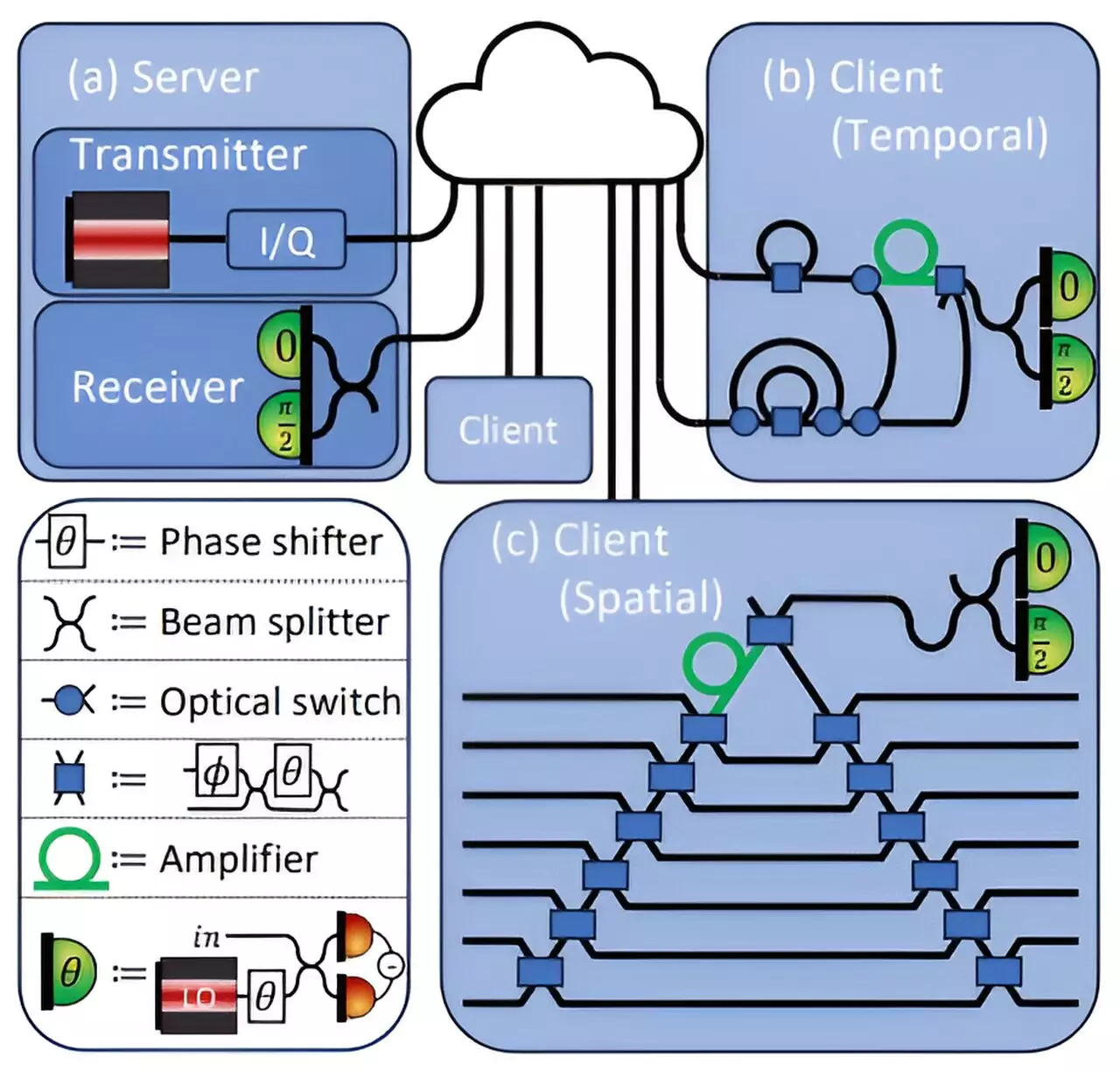

The protocol encodes information in the laser light used by fiber-optic communication systems. This lets the researchers exploit the no-cloning theorem of quantum mechanics, which states that an unknown quantum state cannot be copied perfectly. As a result, the transmitted information stays confidential without degrading the accuracy of the deep learning computation.
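For readers who want the formal statement, the no-cloning result can be sketched in a few lines. This is the standard textbook argument, not something specific to the MIT protocol; the symbols $U$, $|\psi\rangle$, $|\phi\rangle$ and the blank state $|0\rangle$ are notation introduced here for illustration. Suppose a single unitary operation $U$ could copy two different states:

$$
U\big(|\psi\rangle \otimes |0\rangle\big) = |\psi\rangle \otimes |\psi\rangle,
\qquad
U\big(|\phi\rangle \otimes |0\rangle\big) = |\phi\rangle \otimes |\phi\rangle .
$$

Unitary operations preserve inner products, so taking the inner product of the two left-hand sides and of the two right-hand sides gives

$$
\langle \psi | \phi \rangle = \langle \psi | \phi \rangle^{2},
$$

which holds only if $\langle \psi | \phi \rangle$ is 0 or 1. A copier can therefore work only for states that are identical or perfectly distinguishable, never for arbitrary unknown states.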

To see why such a protocol is needed, consider a healthcare provider analyzing medical images. Data confidentiality is paramount here: hospitals must deliver proper patient care while protecting sensitive information. The MIT study considers two parties, a client (the healthcare provider) and a server (which runs the deep learning model), that interact without exposing their respective sensitive information. The protocol lets the healthcare provider use the server’s deep learning capabilities while keeping patient data private.

Because models are trained on vast amounts of data, predictive accuracy must not come at the expense of data security. The MIT team reported that the neural network retained 96% accuracy under their protocol while remaining protected against data leaks. This balance matters not only for the integrity of the model but also for the trust of the users and clients who rely on these technologies.

The protocol is designed to protect both sides of the exchange. The server encodes the parameters of the neural network into an optical field and sends it to the client, who can measure only the information needed to carry out the computation on its own data. Crucially, the protocol ensures that the client cannot extract more information than intended, which significantly reduces the risk of leaks.

Measuring the residual light plays a critical role here. The client sends back the light it did not use for its task, and the server measures it for discrepancies that would indicate a possible security breach. Because the quantum properties of light make excess measurement detectable, this check protects the server’s model while the client’s data stays private.
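The exchange described above can be sketched as a purely classical toy simulation. It is not the researchers' implementation and does not model quantum optics: the constants `HONEST_DISTURBANCE` and `LEAK_THRESHOLD`, the `over_measure` parameter, and every function name below are hypothetical, chosen only to show the control flow of "compute one layer, return the residual, let the server check it".

```python
import random

# Hypothetical numbers: how much an honest, minimal measurement disturbs
# the signal, and the level at which the server suspects a leak.
HONEST_DISTURBANCE = 0.01
LEAK_THRESHOLD = 0.05


def relu_layer(weights, inputs):
    """Apply one dense layer followed by a ReLU activation."""
    return [max(0.0, sum(w * x for w, x in zip(row, inputs))) for row in weights]


def client_step(weights, inputs, rng, over_measure=0.0):
    """Client computes one layer on its private data and returns the residual.

    over_measure > 0 stands in for a client that tries to learn more about
    the weights than the layer output requires, disturbing the signal more.
    """
    outputs = relu_layer(weights, inputs)
    disturbance = HONEST_DISTURBANCE * (1.0 + rng.random()) + over_measure
    return outputs, disturbance


def server_check(disturbance):
    """Server inspects the returned residual; True means no leak suspected."""
    return disturbance <= LEAK_THRESHOLD


def run_inference(layers, private_input, over_measure=0.0, seed=0):
    """Run the layer-by-layer exchange; return None if the server aborts."""
    rng = random.Random(seed)
    activations = private_input  # stays on the client side in this sketch
    for weights in layers:
        activations, disturbance = client_step(weights, activations, rng, over_measure)
        if not server_check(disturbance):
            return None  # server stops sending weights
    return activations
```

With a two-layer network such as `[[[1.0, -1.0], [0.5, 0.5]], [[1.0, 1.0]]]` and the input `[2.0, 1.0]`, an honest client obtains `[2.5]`, while a client called with `over_measure=0.1` exceeds the threshold and gets `None`. In the real protocol the disturbance arises from quantum measurement rather than from a parameter the client chooses.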

Looking ahead, the potential applications of this quantum security protocol extend well beyond healthcare. The research team envisions a broad range of use cases, including federated learning, in which multiple parties collaborate to train a shared deep learning model while keeping their individual datasets confidential. This could benefit industries where data sharing is often impeded by privacy concerns.

The goal is not just to enhance the security of deep learning models but to integrate quantum operations into emerging AI technologies. This fusion of deep learning and quantum key distribution offers new avenues for privacy-preserving mechanisms in diverse applications. Researchers and industry experts are keen to see how the protocol holds up under experimental conditions and how well it translates to real-world applications.

The MIT researchers’ work marks a significant step toward addressing the security issues that confront cloud-based deep learning systems. Their protocol preserves privacy and maintains computational integrity at the same time, and it challenges conventional approaches to data security. As these technologies continue to evolve, the implications for sectors such as healthcare and finance could be profound.
