Weather forecasting is not merely a matter of predicting rain or sunshine; it plays a pivotal role in many sectors vital to society. In agriculture, transportation, and energy, accurate weather predictions inform decisions that drive the U.S. economy. Industries such as aviation and shipping rely heavily on precise forecasts to manage operations and mitigate risks. Inaccurate forecasts can be costly and even catastrophic, leading to financial losses, safety hazards, and inefficiencies. Given these stakes, raising the standard of weather modeling is essential.

The Limitations of Traditional Models

For decades, meteorologists have relied on numerical weather prediction models that solve complex equations of thermodynamics and fluid dynamics. While these models have steadily improved, they carry a hefty computational footprint, requiring vast supercomputers to churn through data. That cost limits how often forecasts can be refreshed and how broad each update can be, a substantial drawback in an environment as volatile as our atmosphere. The constant race for higher-resolution data to improve accuracy has made these models prohibitively expensive for continual, real-time prediction. Consequently, this forecasting paradigm is ripe for disruption, and artificial intelligence promises to deliver it.

AI Foundation Models: A Paradigm Shift

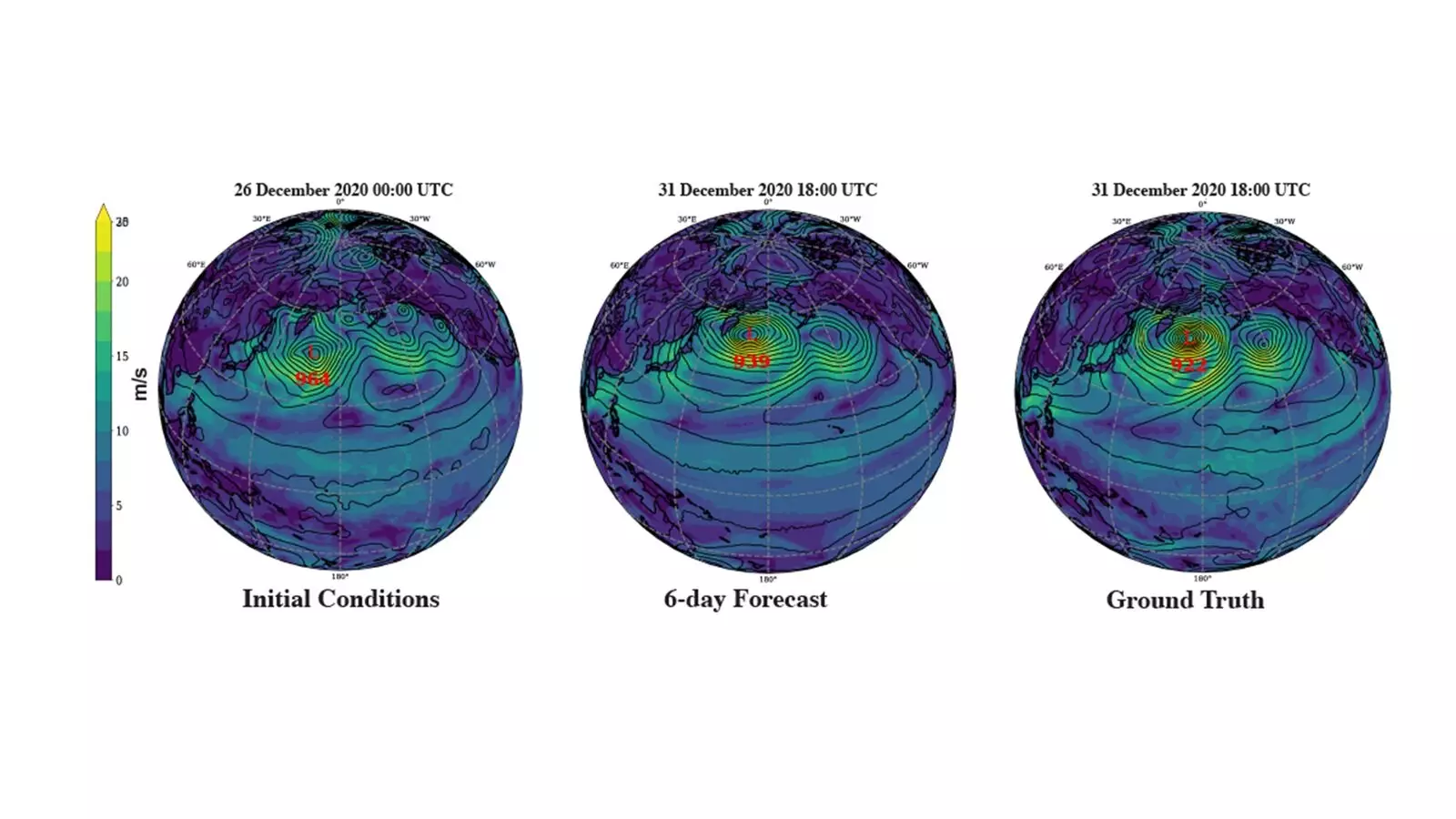

Recent work by researchers at Argonne National Laboratory and their collaborators signals a transformative shift in how we approach weather forecasting. These scientists are adapting foundation models, large-scale AI systems best known from natural language processing, into a framework built specifically for meteorology. The key idea is the token: instead of a word or word fragment, each token is a small patch of gridded atmospheric data, such as a region of a temperature or humidity map. This lets the model treat weather as spatial-temporal data rather than text and generate accurate predictions at a fraction of the computational expense of traditional methods.
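
To make the idea of image-patch tokens more concrete, here is a minimal Python sketch of one common way to produce them: splitting a 2-D grid into non-overlapping square patches, as vision transformers do. This is an assumption for illustration only; the article does not describe Argonne's exact tokenization, and the function name `patchify` and the sample grid are hypothetical.

```python
# Minimal sketch: turn a gridded atmospheric field into "tokens".
# Assumption: non-overlapping square patches (vision-transformer style).
# The article does not specify Argonne's actual tokenization scheme;
# `patchify` is a hypothetical helper written for illustration.

def patchify(field, patch):
    """Split a 2-D grid (list of rows) into flattened, non-overlapping patches.

    Each returned token is a list of patch*patch values, read row by row.
    The grid height and width must both be divisible by `patch`.
    """
    rows, cols = len(field), len(field[0])
    if rows % patch or cols % patch:
        raise ValueError("grid dimensions must be divisible by the patch size")
    tokens = []
    for r0 in range(0, rows, patch):        # move down one patch at a time
        for c0 in range(0, cols, patch):    # move across one patch at a time
            token = [field[r][c]
                     for r in range(r0, r0 + patch)
                     for c in range(c0, c0 + patch)]
            tokens.append(token)
    return tokens


if __name__ == "__main__":
    # Hypothetical 4x4 temperature grid (degrees C); values invented for illustration.
    temperature = [
        [10, 11, 12, 13],
        [14, 15, 16, 17],
        [18, 19, 20, 21],
        [22, 23, 24, 25],
    ]
    tokens = patchify(temperature, patch=2)
    print(len(tokens))  # 4
    print(tokens[0])    # [10, 11, 14, 15]
```

Each token plays the role a word would play in a language model; a second variable such as humidity could be tokenized the same way, though how the variables are combined in the real model is not stated in the article.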

Sandeep Madireddy, a computer scientist at Argonne, explained the significance of this transition: “Instead of being interested in a text sequence, you’re looking at spatial-temporal data, which is represented in images.” By working with coarser, more manageable chunks of data instead of the highest-resolution inputs, these models extract useful patterns without the computational demands of traditional methods. The approach could yield forecasts that rival, and possibly surpass, those from conventional high-resolution models.

Breaking Down Barriers: The Philosophy of Low-Resolution Data

Forecasting has long been, and continues to be, a quest for ever-higher resolution, often overlooking what coarser data can offer. Rao Kotamarthi of Argonne points out that “we’re actually able to get comparable results to existing high-resolution models even at coarse resolution with the method we are using.” This finding is a game changer: it allows faster progress in forecasting technology while easing the financial burden on research institutions. Such breakthroughs could democratize weather forecasting, making accurate predictions accessible even in less-resourced settings.
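
The computational case for coarse resolution can be illustrated with simple arithmetic. The Python below is a minimal sketch under stated assumptions: it block-averages a grid to a coarser resolution and counts how many patch tokens each resolution produces. The helper names `coarsen` and `token_count`, the grid sizes, and the patch size are hypothetical and are not taken from the Argonne work; plain block averaging stands in for whatever regridding the team actually used.

```python
# Minimal sketch: coarse-grain a grid and compare token counts.
# Assumptions (not from the article): block averaging as the regridding
# method, non-overlapping square patches, and made-up grid sizes.

def coarsen(field, factor):
    """Average each factor x factor block of a 2-D grid into one cell."""
    rows, cols = len(field), len(field[0])
    if rows % factor or cols % factor:
        raise ValueError("grid dimensions must be divisible by the factor")
    out = []
    for r0 in range(0, rows, factor):
        out_row = []
        for c0 in range(0, cols, factor):
            block = [field[r][c]
                     for r in range(r0, r0 + factor)
                     for c in range(c0, c0 + factor)]
            out_row.append(sum(block) / len(block))  # block mean
        out.append(out_row)
    return out


def token_count(rows, cols, patch):
    """Number of non-overlapping patch tokens for a rows x cols grid."""
    return (rows // patch) * (cols // patch)


if __name__ == "__main__":
    # Hypothetical 4x4 field coarsened by a factor of 2.
    sample = [
        [10, 11, 12, 13],
        [14, 15, 16, 17],
        [18, 19, 20, 21],
        [22, 23, 24, 25],
    ]
    print(coarsen(sample, 2))  # [[12.5, 14.5], [20.5, 22.5]]

    # Hypothetical fine and coarse global grids, same patch size.
    fine = token_count(720, 1440, patch=4)
    coarse = token_count(360, 720, patch=4)
    ratio = fine / coarse
    print(fine, coarse, ratio)  # 64800 16200 4.0
    # Standard self-attention compares every token pair, so its cost
    # scales roughly with the square of the token count.
    print(ratio ** 2)  # 16.0
```

Halving the resolution in each direction quarters the token count, and because standard self-attention compares every token with every other, that part of the cost falls by roughly a factor of 16. Real models have other costs as well, so this is intuition rather than a measured speedup.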

However, while this innovation brings promising near-term forecasting capabilities, climate modeling presents an entirely different set of challenges. Climate modeling requires understanding how weather patterns evolve over decades rather than days, which makes it far more complex than daily forecasting.

Challenges in Climate Modeling and the Path Forward

Applying AI foundation models to climate modeling is tempting, but it comes with significant challenges. Kotamarthi notes that the private sector has more incentive to innovate in weather forecasting than in climate modeling, partly because accurate weather predictions have immediate commercial applications. Climate modeling, by contrast, requires a long-term commitment that goes beyond near-term profit, so much of that research is carried out at national laboratories and academic institutions.

Argonne environmental scientist Troy Arcomano emphasizes the complications posed by our changing climate: “We’ve gone from what had been a largely stationary state to a non-stationary state.” In a non-stationary climate, statistics drawn from past observations no longer reliably describe the future, which undermines traditional statistical models and calls for more sophisticated AI that can adapt to evolving conditions.

Advancements with Exascale Computing

The arrival of Argonne’s new exascale supercomputer, Aurora, represents a major leap in AI research capability. As Kotamarthi states, “We need an exascale machine to really be able to capture a fine-grained model with AI.” Aurora should allow researchers to train higher-resolution AI models that better capture the fluid dynamics of climate systems.

The recognition of this work through awards, such as the Best Paper Award at the “Tackling Climate Change with Machine Learning” workshop, further underscores its importance and the growing interest in leveraging advanced computational methods for real-world problems.

As we stand on the cusp of potentially revolutionary advances in weather forecasting and climate modeling, the interplay between AI and atmospheric science offers a beacon of hope. The precision, accessibility, and adaptability that AI offers could redefine how we understand and respond to a changing climate, pushing boundaries and reshaping paradigms for future generations.
