A team of researchers at the Alberta Machine Intelligence Institute (Amii) has shed light on a critical challenge in machine learning: the loss of plasticity in deep continual learning. This issue is a significant barrier to building AI systems that can keep adapting in real-world settings. The team’s paper, titled “Loss of Plasticity in Deep Continual Learning,” published in Nature, examines why many deep learning agents gradually lose the ability to learn when they are trained continually. The authors, Shibhansh Dohare, J. Fernando Hernandez-Garcia, Qingfeng Lan, Parash Rahman, A. Rupam Mahmood, and Richard S. Sutton, show how loss of plasticity degrades the performance and learning ability of AI agents.

According to the researchers, loss of plasticity not only prevents AI agents from acquiring new knowledge but can also leave them unable to relearn what they had learned before. The term comes from neuroscience, where plasticity refers to the brain’s ability to adapt and form new neural connections, and its loss is a major obstacle to developing AI systems that can cope with the complexities of the real world. Existing models such as ChatGPT are ill-equipped for continual learning because they are typically trained for a fixed period and then deployed without further learning. This limitation restricts how well AI systems can adapt in environments that demand constant learning, such as financial markets.

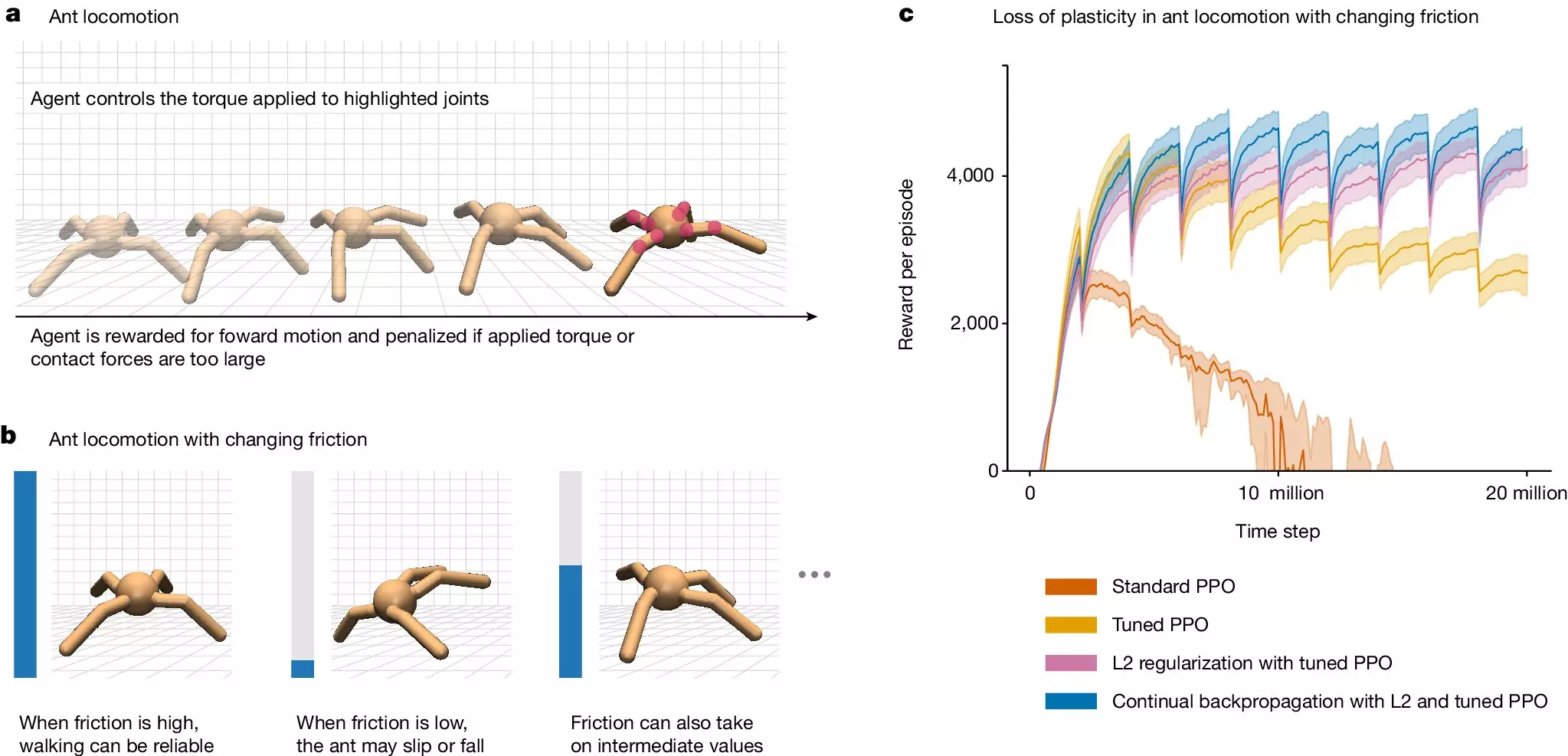

The research team first ran experiments to measure how widespread loss of plasticity is in deep learning systems. By training networks on long sequences of classification tasks and tracking how performance declined from one task to the next, they showed that the problem is pervasive. The next step was to find a way to counter loss of plasticity in deep continual learning networks. The team tested a modified algorithm called “continual backpropagation,” which continually and randomly reinitializes a small fraction of the network’s less-used units during training. This approach showed promising results, allowing networks to keep learning throughout the long task sequences tested and pointing to a practical way around an inherent weakness of standard deep learning.
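To make the reinitialization idea concrete, here is a minimal, framework-free sketch of the selective-reset step for one hidden layer. It assumes a utility measure roughly like the “contribution utility” Dohare et al. describe (a running average of a unit’s activation magnitude times the summed magnitude of its outgoing weights). The class name `ContinualBackpropLayer`, its parameter names and default values, and the uniform initializer are hypothetical illustration choices, not the authors’ code or settings, and the ordinary gradient update that would run before each `update` call is omitted.

```python
import random


class ContinualBackpropLayer:
    """Hypothetical bookkeeping for a simplified continual-backprop reset step.

    w_in[i]  : incoming weights of hidden unit i
    w_out[i] : outgoing weights of hidden unit i
    """

    def __init__(self, n_in, n_hidden, n_out, replacement_rate=1e-4,
                 decay_rate=0.99, maturity_threshold=100, seed=0):
        self.rng = random.Random(seed)
        self.n_in = n_in
        # Assumed uniform initializer; the real method re-samples from the
        # network's original initialization distribution.
        self.bound = 1.0 / n_in ** 0.5
        self.w_in = [self._fresh_in() for _ in range(n_hidden)]
        self.w_out = [[self.rng.uniform(-0.1, 0.1) for _ in range(n_out)]
                      for _ in range(n_hidden)]
        self.utility = [0.0] * n_hidden
        self.age = [0] * n_hidden
        self.rho = replacement_rate       # fraction of mature units reset per step
        self.eta = decay_rate             # decay of the running utility average
        self.maturity = maturity_threshold
        self.to_replace = 0.0             # fractional count of resets owed

    def _fresh_in(self):
        return [self.rng.uniform(-self.bound, self.bound) for _ in range(self.n_in)]

    def update(self, activations):
        """Call once per training step with the hidden activations h_i.

        Returns the indices of the units that were reinitialized this step.
        """
        for i, h in enumerate(activations):
            # Contribution utility: |h_i| * sum_k |w_out[i][k]|, averaged over time.
            contribution = abs(h) * sum(abs(w) for w in self.w_out[i])
            self.utility[i] = self.eta * self.utility[i] + (1 - self.eta) * contribution
            self.age[i] += 1

        # Only units older than the maturity threshold are eligible for reset.
        mature = [i for i, a in enumerate(self.age) if a > self.maturity]
        self.to_replace += self.rho * len(mature)

        replaced = []
        while self.to_replace >= 1 and mature:
            worst = min(mature, key=lambda i: self.utility[i])
            mature.remove(worst)
            self.w_in[worst] = self._fresh_in()                  # fresh random input weights
            self.w_out[worst] = [0.0] * len(self.w_out[worst])   # no effect on output yet
            self.utility[worst] = 0.0
            self.age[worst] = 0
            self.to_replace -= 1
            replaced.append(worst)
        return replaced


layer = ContinualBackpropLayer(n_in=4, n_hidden=3, n_out=2,
                               replacement_rate=0.5, maturity_threshold=2)
for step in range(3):
    # Unit 1 never fires, so its utility stays at zero.
    print(step, layer.update([1.0, 0.0, 0.5]))
```

In this toy run the silent unit 1 is the one reset once all units pass the maturity threshold. Zeroing a reset unit’s outgoing weights means the network’s current output is unchanged at the moment of the reset, and the maturity threshold gives freshly reset units time to build up a utility estimate before they can be replaced again.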

While the team’s work is a significant step toward tackling loss of plasticity, further research is needed to improve the performance and adaptability of AI systems. By identifying and mitigating fundamental problems like this one within deep learning, researchers are paving the way for more intelligent and adaptive AI systems.
