The field of speech emotion recognition (SER) is gaining traction, fueled by advances in artificial intelligence and deep learning. The technology aims to infer human emotions from speech, with applications ranging from customer service to mental health monitoring. Deep learning architectures, particularly convolutional neural networks (CNNs) paired with long short-term memory (LSTM) networks, have shown strong potential for improving the accuracy of SER systems. However, the same innovations that propel the field forward also introduce significant vulnerabilities, leaving these systems open to malicious manipulation.

Recent research by a team at the University of Milan shows how susceptible SER models are to adversarial attacks. These attacks use strategically perturbed inputs designed to trick deep learning models into making incorrect predictions. The study evaluated a range of attack methods, covering both white-box techniques, where the attacker has access to the model's internals, and black-box techniques, where the attacker can only observe its outputs. The findings carry real weight for developers and researchers building emotionally intelligent systems.
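To make the idea of a strategically perturbed input concrete, here is a minimal white-box sketch using the fast gradient sign method (FGSM), a well-known attack that nudges every input feature in the direction that increases the model's loss. The article does not say which specific white-box attacks the study used, so FGSM is only an illustrative choice. The tiny logistic-regression "model", its weights, and the feature vector are hypothetical stand-ins for a real CNN-LSTM and its audio features.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, bias, x):
    """Probability of class 1 under a toy logistic-regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def fgsm(weights, bias, x, y, epsilon):
    """Return x shifted by epsilon along the sign of the loss gradient.

    White-box: the attacker reads the model's weights directly. For
    logistic regression with cross-entropy loss, d(loss)/dx = (p - y) * w.
    """
    p = predict_proba(weights, bias, x)
    grad = [(p - y) * w for w in weights]
    return [xi + epsilon * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]


# Hypothetical model parameters and input features (assumptions, not from the study).
weights, bias = [1.5, -2.0, 0.5], 0.1
x, y = [0.4, -0.3, 0.2], 1

x_adv = fgsm(weights, bias, x, y, epsilon=0.5)
print("clean probability of true class:    ", round(predict_proba(weights, bias, x), 3))
print("perturbed probability of true class:", round(predict_proba(weights, bias, x_adv), 3))
```

In this toy setup the perturbation is bounded by `epsilon` per feature, yet it is enough to push the prediction across the 0.5 decision threshold. Real attacks on audio models work on far higher-dimensional inputs and typically use much smaller perturbations.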

The evaluation, which covered multiple datasets including EmoDB for German, EMOVO for Italian, and RAVDESS for English, revealed a troubling trend: every adversarial attack tested significantly degraded model performance. A standout concern was that black-box attacks such as the Boundary Attack were especially effective. The researchers noted that these attacks, executed with minimal knowledge of the model’s internal mechanics, still induced substantial prediction errors. This points to a serious risk: attackers can exploit SER systems without detailed insight into how the models operate.
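The sketch below shows why a decision-based black-box attack needs so little knowledge: it only ever asks the model for a predicted label. It is a heavily simplified random walk in the spirit of the Boundary Attack, not the full algorithm and not the study's implementation. The classifier `predict_label`, its hidden weights, and the input vectors are all hypothetical.

```python
import math
import random


def predict_label(x):
    """Hypothetical black-box classifier: the attacker sees only this label."""
    hidden_weights = [0.8, -0.5, 0.3, 0.6]  # never read by the attack code
    return 1 if sum(w * xi for w, xi in zip(hidden_weights, x)) > 0 else 0


def l2(a, b):
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def decision_based_attack(original, start, steps=500, step=0.1, noise=0.05, seed=0):
    """Walk from a misclassified point toward `original`, staying misclassified.

    Each candidate adds small Gaussian noise and then moves a fraction `step`
    toward the original. It is kept only if the label is still wrong and the
    candidate is closer to the original than the current point.
    """
    rng = random.Random(seed)
    true_label = predict_label(original)
    if predict_label(start) == true_label:
        raise ValueError("start must already be misclassified")
    current = list(start)
    for _ in range(steps):
        noisy = [c + rng.gauss(0.0, noise) for c in current]
        candidate = [n + step * (o - n) for n, o in zip(noisy, original)]
        still_wrong = predict_label(candidate) != true_label
        if still_wrong and l2(candidate, original) < l2(current, original):
            current = candidate
    return current


original = [1.0, 0.2, 0.5, 0.4]    # classified as 1 by the toy model
start = [-1.0, 1.0, -1.0, -1.0]    # classified as 0, far from the original

adversarial = decision_based_attack(original, start)
print("distance before:", round(l2(start, original), 3))
print("distance after: ", round(l2(adversarial, original), 3))
print("label flipped:  ", predict_label(adversarial) != predict_label(original))
```

The attack never touches weights, gradients, or confidence scores, which is what makes this class of attack practical against deployed systems that expose only a prediction.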

The study also included a gender-focused analysis, examining how adversarial attacks affected male and female speech across different languages. While minor performance differences emerged, the overall pattern was broadly consistent across linguistic contexts. English emerged as the most vulnerable language, whereas Italian was more robust against these attacks. The analysis also indicated a subtle tendency for male samples to be disrupted slightly less than female ones, although the differences remained marginal.

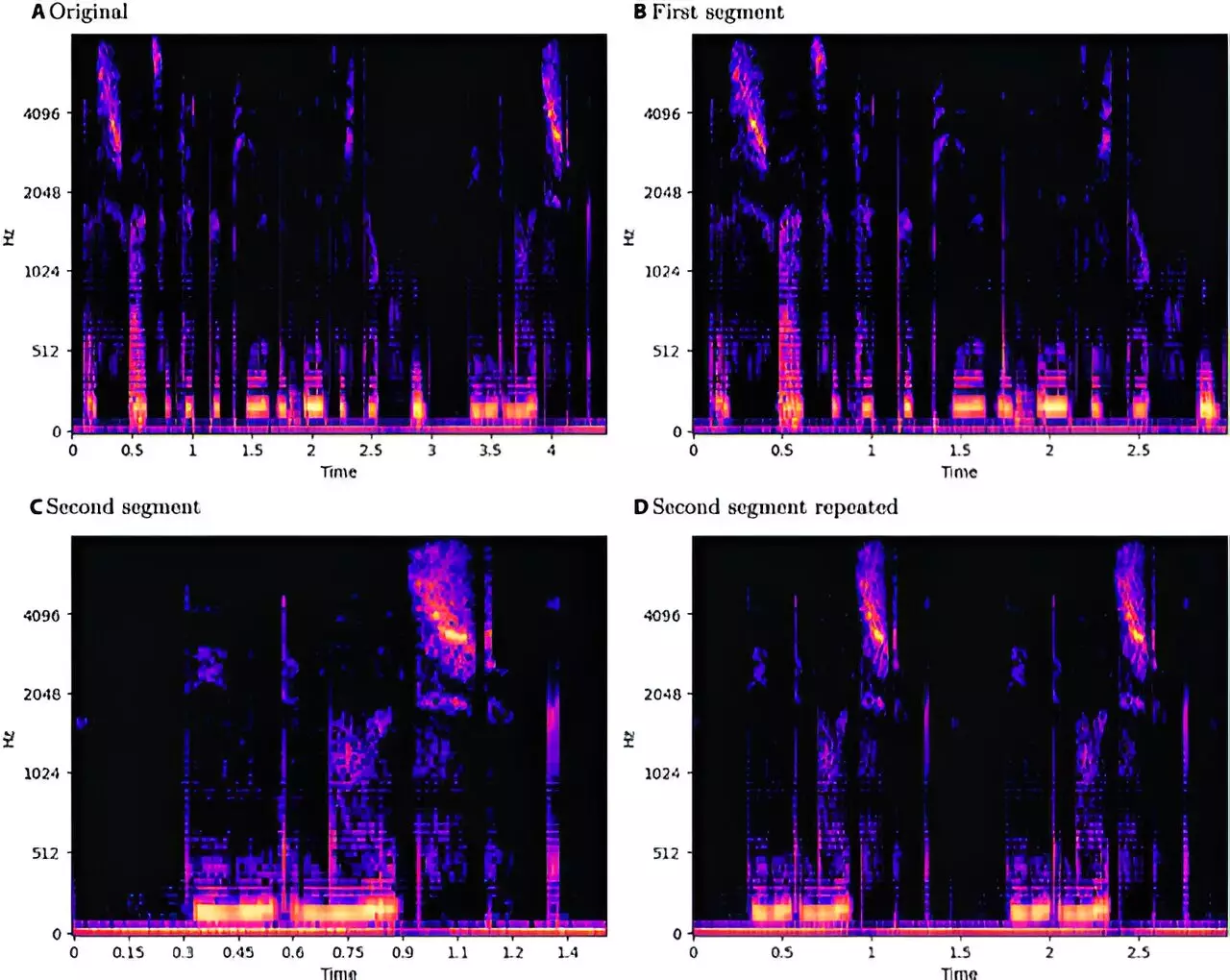

The team’s methodology ensured consistency by applying a standardized approach to dataset augmentation, using techniques such as pitch shifting and time stretching, all within a three-second threshold. This controlled setup was crucial for a fair comparison across the different datasets, yet it also raised questions about the inherent limits of SER systems across demographic groups.
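As a rough illustration of this kind of preprocessing, the sketch below applies a naive speed perturbation by linear-interpolation resampling and then trims or zero-pads each clip to three seconds. Two assumptions are worth flagging. First, the article does not spell out what the three-second threshold means, so fixing clip length at three seconds is a guess. Second, plain resampling changes duration and pitch together, whereas true pitch shifting and time stretching change one while holding the other fixed (typically with a phase vocoder), so this is a simplified stand-in rather than the study's pipeline. The function names and the toy sample rate are hypothetical.

```python
import math


def resample_linear(signal, rate):
    """Naive speed change: rate > 1 shortens the clip, rate < 1 lengthens it.

    Note that this alters pitch as well as duration.
    """
    if rate <= 0:
        raise ValueError("rate must be positive")
    if not signal:
        return []
    n_out = max(1, round(len(signal) / rate))
    last = len(signal) - 1
    out = []
    for i in range(n_out):
        pos = i * rate
        lo = min(int(pos), last)
        hi = min(lo + 1, last)
        frac = pos - lo
        out.append((1.0 - frac) * signal[lo] + frac * signal[hi])
    return out


def fix_length(signal, sample_rate, seconds=3.0):
    """Trim or zero-pad so every clip has exactly `seconds` of samples."""
    target = int(sample_rate * seconds)
    trimmed = list(signal[:target])
    return trimmed + [0.0] * (target - len(trimmed))


# Toy 2-second, 5 Hz tone at a deliberately tiny sample rate (assumption).
sample_rate = 100
tone = [math.sin(2 * math.pi * 5 * t / sample_rate) for t in range(2 * sample_rate)]

faster = fix_length(resample_linear(tone, 1.25), sample_rate)  # 1.6 s, then padded
slower = fix_length(resample_linear(tone, 0.5), sample_rate)   # 4.0 s, then trimmed
print(len(faster), len(slower))
```

Forcing every clip to the same length is what makes results comparable across datasets whose recordings differ in duration. A production pipeline would more likely rely on an audio library's pitch-shift and time-stretch routines than on raw resampling.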

While discussions around vulnerabilities in technology often raise concerns about potential exploitation, the researchers argued for transparency as a pathway to security. They contended that withholding this vital information could be more hazardous than making vulnerabilities public. By openly sharing findings from their research, they aim to empower both system developers and users to better defend against adversarial threats. Enhanced understanding of weaknesses fosters an environment of proactive defense rather than reactive measures.

Moreover, the researchers encouraged the establishment of best practices for deploying SER models, particularly in sensitive applications such as mental health support and surveillance systems. They advocated ongoing vigilance and continual improvement of the security frameworks that protect these emotion recognition technologies.

As demand for emotionally intelligent systems grows, addressing the vulnerabilities uncovered in speech emotion recognition models will be essential. The intersection of deep learning and cybersecurity is not just a challenge but also an opportunity for innovation. By fostering a culture of transparency and collaboration, researchers can give themselves and practitioners the tools needed to defend against adversarial attacks. Stakeholders who remain proactive about security can help ensure that the promise of speech emotion recognition is not overshadowed by its vulnerabilities, and that the resulting systems genuinely enhance the human experience.
