In today’s technology-driven world, artificial intelligence (AI) and agriculture increasingly overlap. One growing area of research is using machine learning to assess food quality, with the aim of improving both consumer experiences and food processing. Despite considerable progress, a noticeable gap remains between humans’ innate adaptability in judging food quality and what current machine-learning models can do. This article examines a recent study by the Arkansas Agricultural Experiment Station that aims to narrow that gap by using human perception to make machine vision systems better at assessing food quality.

The study, led by Dongyi Wang, an assistant professor specializing in smart agriculture and food manufacturing, examines how machine-learning models can better mimic human assessments of food quality. Traditional models have struggled with consistency, particularly when environmental variables such as lighting come into play. Human judgment is swayed by many factors, and varying illumination in particular can significantly change how food looks to an observer. This inconsistency among human observers has historically posed a challenge for machine-learning algorithms, which rely on ‘ground truths’: the reference labels they are trained against.

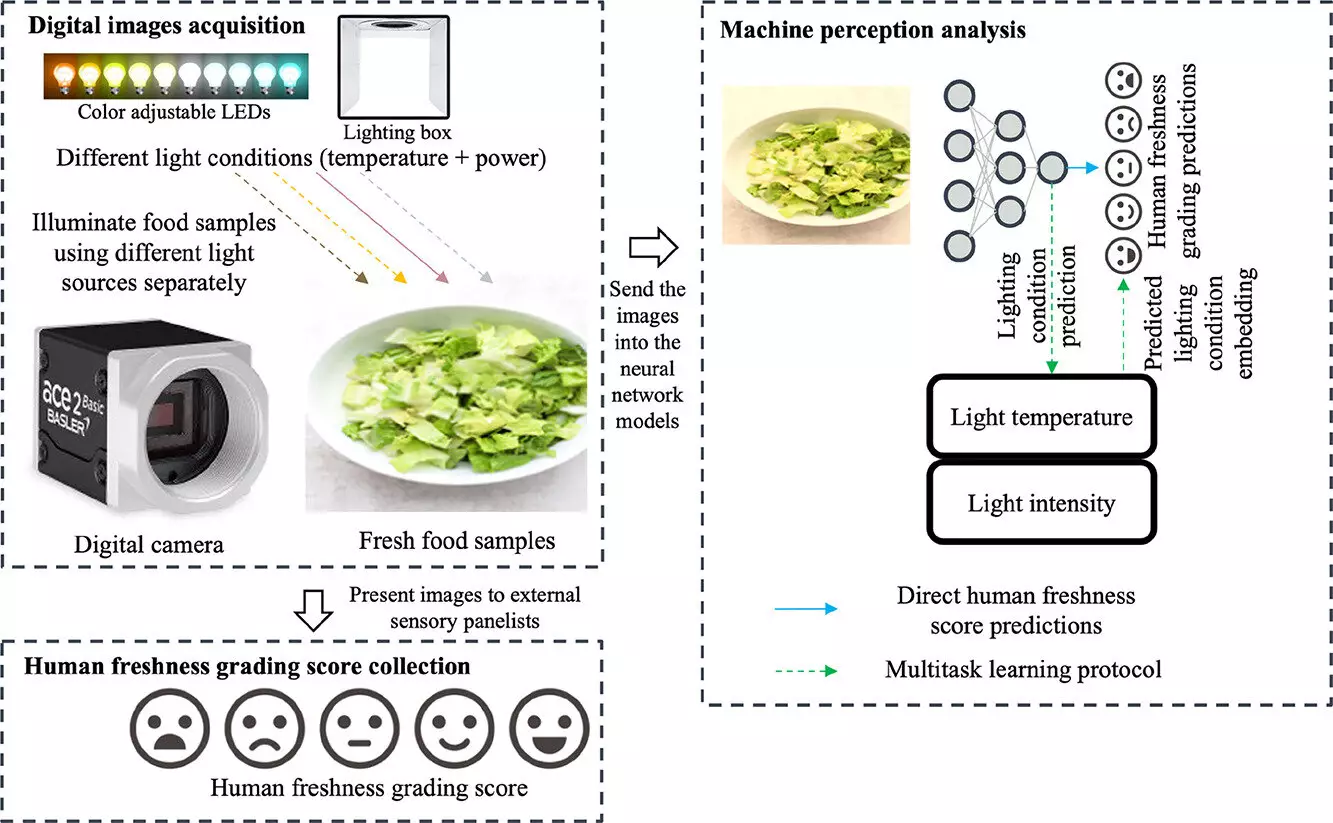

The primary goal of the research is to refine these algorithms by training them on human assessments collected under varying illumination conditions. The approach is intended to make machine-learning predictions more reliable, addressing a long-standing concern in the field.

Methodology: An In-Depth Analysis

To investigate how lighting affects human perception, the researchers used romaine lettuce as a test subject. Romaine is particularly prone to browning, which makes it a good candidate for the study. The sensory panel consisted of 109 participants spanning a range of ages, who evaluated images of romaine lettuce over five consecutive days. Each participant graded the freshness of the lettuce in each image on a scale of zero to 100, capturing how perceived freshness may shift under different lighting conditions.

The resulting dataset consisted of 675 images taken under a range of color temperatures and brightness levels. Controlling these two properties of the light let the researchers isolate the specific lighting variations that could affect human perception. This matters because prior studies have often overlooked illumination’s impact on sensory evaluations, which can build flawed assumptions into model training.
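The article does not say how the individual ratings were combined into training labels. As a minimal sketch of one common approach, assuming each rating is stored with its image ID and lighting condition, the Python below averages all participants’ 0 to 100 scores per image and condition, and keeps the standard deviation as a rough measure of how much the panel disagreed. The `Rating` record, its field names, and the sample scores are hypothetical, not taken from the study.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass(frozen=True)
class Rating:
    """One participant's score for one image (hypothetical record layout)."""
    image_id: str
    color_temp_k: int   # illumination color temperature in kelvin
    brightness: str     # brightness level label, e.g. "low" or "high"
    participant: int
    score: float        # freshness score on the 0-100 scale


def aggregate_labels(ratings):
    """Group scores by (image, color temperature, brightness) and summarize each group.

    Returns {(image_id, color_temp_k, brightness): (mean, population std dev, count)}.
    """
    groups = defaultdict(list)
    for r in ratings:
        if not 0 <= r.score <= 100:
            raise ValueError(f"score out of range: {r.score}")
        groups[(r.image_id, r.color_temp_k, r.brightness)].append(r.score)
    return {key: (mean(s), pstdev(s), len(s)) for key, s in groups.items()}


# Made-up ratings: one lettuce image shown under two lighting conditions.
ratings = [
    Rating("lettuce_01", 3000, "low", 1, 62.0),
    Rating("lettuce_01", 3000, "low", 2, 70.0),
    Rating("lettuce_01", 6500, "high", 1, 80.0),
    Rating("lettuce_01", 6500, "high", 2, 84.0),
]

for key, (avg, spread, n) in sorted(aggregate_labels(ratings).items()):
    print(key, f"mean={avg:.1f}", f"sd={spread:.1f}", f"n={n}")
```

In this made-up example the same lettuce image averages 66 under warm, dim light and 82 under cool, bright light, which is the kind of illumination-dependent shift a model trained on such labels would need to learn.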

Findings: The Impact of Human Perception

The results were notable: models trained with human perceptual data had prediction errors roughly 20% lower than existing algorithms that did not account for human variability. The study also highlighted a shortcoming of existing machine-learning models, which have largely ignored the complexities and subtleties of how humans evaluate food.
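A 20% reduction in prediction error is a relative figure, not a drop of 20 points on the freshness scale. The sketch below shows the arithmetic, assuming error is measured as mean absolute error (MAE) against panel-averaged scores; the article does not name the metric the researchers used, and every number here is invented for illustration.

```python
def mean_absolute_error(y_true, y_pred):
    """Average absolute gap between human scores and model predictions."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def relative_reduction(baseline_error, new_error):
    """Fractional drop in error; 0.20 means the new error is 20% lower."""
    if baseline_error <= 0:
        raise ValueError("baseline error must be positive")
    return (baseline_error - new_error) / baseline_error


# Hypothetical freshness scores on the 0-100 scale (not data from the study).
human = [66.0, 82.0, 40.0, 55.0]       # panel-averaged "ground truth"
baseline = [76.0, 72.0, 50.0, 45.0]    # model that ignores illumination
perception = [74.0, 74.0, 48.0, 47.0]  # model trained with human perception data

base_mae = mean_absolute_error(human, baseline)
new_mae = mean_absolute_error(human, perception)
reduction = relative_reduction(base_mae, new_mae)
print(f"Baseline MAE: {base_mae}, new MAE: {new_mae}, reduction: {reduction:.0%}")
```

Here an average miss of 10 points falling to 8 points is a 20% relative reduction; the same 2-point improvement on a baseline error of 20 points would be only 10%.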

Because human judgment is shaped by environmental conditions, training computer models to account for those effects could change how food quality is assessed in the future. By tuning algorithms to reflect human perception, the research team hopes to build systems that deliver more accurate and reliable predictions and, in turn, greater consumer satisfaction.

Broader Implications for the Food Industry

The implications of this research extend beyond food quality assessment itself. The findings could also inform how grocery stores present their products. A store using machine-learning algorithms attuned to how consumers perceive food quality could, for example, display fruits and vegetables in a way that highlights their freshness, improving the shopping experience.

Wang also envisions applying the approach in other areas, such as food processing and jewelry evaluation. As industries increasingly turn to automation for efficiency and accuracy, integrating human perception into machine learning could serve as a blueprint for future innovations.

The study also reflects the collaborative nature of modern research, drawing on experts from multiple departments at the University of Arkansas. By combining food science, engineering, and computer science, it is a clear example of interdisciplinary collaboration on a complex problem in food quality assessment.

As machine learning continues to evolve, integrating human perceptual data could transform how food quality is assessed. By recognizing the limitations of traditional AI models and drawing on the adaptability of human observers, researchers like Wang are working toward smarter, more effective food quality evaluations that serve both consumer needs and industry standards.
