Self-driving cars have made significant strides in recent years, but they still struggle to process static or slow-moving objects in 3D space. This limitation stems from visual systems that lack the depth perception needed to navigate complex environments. Insects, on the other hand, have evolved sophisticated visual systems that let them perceive depth and motion with great accuracy. Praying mantises in particular possess a distinctive form of binocular vision that enables them to see the world in three dimensions.

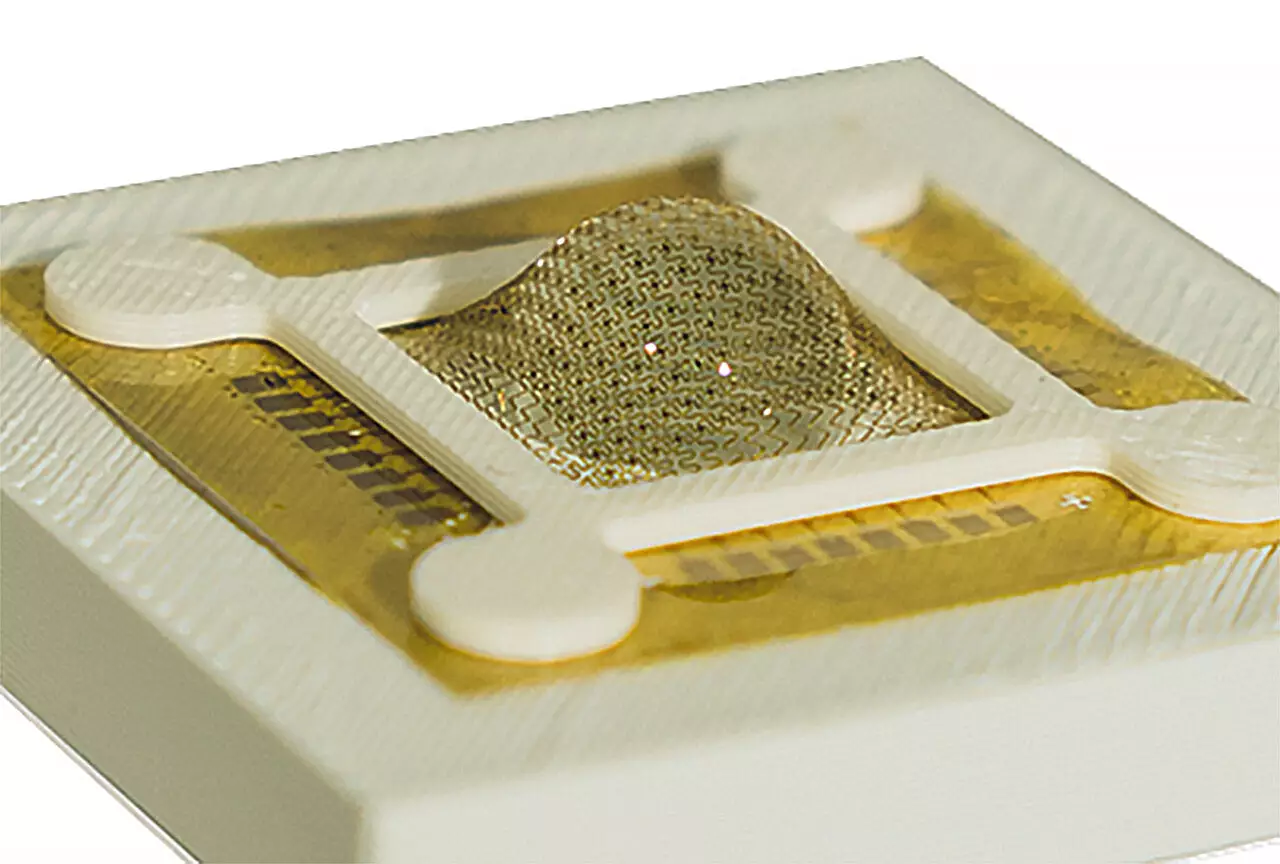

Inspired by the praying mantis eye, researchers at the University of Virginia School of Engineering and Applied Science have developed artificial compound eyes that address these shortcomings. By integrating microlenses and multiple photodiodes on a flexible semiconductor material, the team created a sensor array that mimics the structure and function of mantis eyes. The design provides a wide field of view and superior depth perception, both essential for systems that must interact with dynamic surroundings.

A key advantage of the artificial compound eyes is their low power consumption compared with traditional visual systems. The researchers' system processes visual information in real time, eliminating the need for cloud computing and significantly reducing energy use. By continuously monitoring changes in the scene and encoding them into smaller data sets for processing, the sensor array mirrors how insects perceive the world through visual cues. This approach improves spatial awareness and makes 3D spatiotemporal perception both more efficient and more accurate.
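The idea of watching for changes and passing on only a compact summary can be illustrated with a toy sketch. This is not the UVA team's method, which the article does not describe in detail. It assumes frames arrive as 2D grids of normalized intensities, and the `encode_changes` function, `Event` type, and fixed `threshold` are all hypothetical: only pixels whose intensity changes by more than the threshold are reported, so a mostly static scene yields very little data.

```python
from typing import List, NamedTuple


class Event(NamedTuple):
    """A hypothetical sparse change record for one pixel."""
    x: int
    y: int
    polarity: int  # +1 if the pixel got brighter, -1 if it got darker


def encode_changes(prev: List[List[float]],
                   curr: List[List[float]],
                   threshold: float = 0.1) -> List[Event]:
    """Compare two frames and return events only for pixels that changed
    by more than `threshold`; unchanged pixels produce no data at all."""
    if len(prev) != len(curr) or any(len(a) != len(b) for a, b in zip(prev, curr)):
        raise ValueError("frames must have the same shape")
    events: List[Event] = []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (p, c) in enumerate(zip(row_prev, row_curr)):
            delta = c - p
            if abs(delta) > threshold:
                events.append(Event(x, y, 1 if delta > 0 else -1))
    return events


# Usage: a 3x3 scene in which one pixel brightens and one darkens.
prev = [[0.5, 0.5, 0.5],
        [0.5, 0.5, 0.5],
        [0.5, 0.5, 0.5]]
curr = [[0.5, 0.9, 0.5],
        [0.5, 0.5, 0.5],
        [0.5, 0.5, 0.2]]
events = encode_changes(prev, curr)
print(f"{len(events)} events from {len(curr) * len(curr[0])} pixels: {events}")
```

Here nine pixel readings shrink to two events; in a real sensor the savings would depend on how much of the scene actually moves.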

The development of these biomimetic artificial eyes is a significant advance in visual data processing. By integrating advanced materials and algorithms, the research team has demonstrated a clever solution to complex challenges in the field. The combination of flexible semiconductor materials, conformal devices, in-sensor memory components, and post-processing algorithms paves the way for real-time, efficient, and accurate 3D perception. Beyond self-driving vehicles, the work could inspire engineers and scientists developing solutions for a wide range of other applications.

The research by the University of Virginia team could reshape visual data processing. By drawing inspiration from the natural world, their biomimetic artificial eyes overcome significant limitations of current visual systems: they improve spatial awareness and depth perception while processing visual information in real time and more energy-efficiently. With further advances, this approach could usher in a new generation of visual data processing that is both efficient and highly accurate.
