Machine learning has advanced rapidly in recent years, with artificial neural networks now at the forefront of complex language and vision tasks. Training these networks, however, can be slow and power-intensive, and scientists face tradeoffs whenever they build and scale up brain-like systems for machine learning applications. The challenge is to find a system that is fast and low-power while also being scalable and able to handle more complex tasks.

The Emergence of Self-Learning Analog Systems

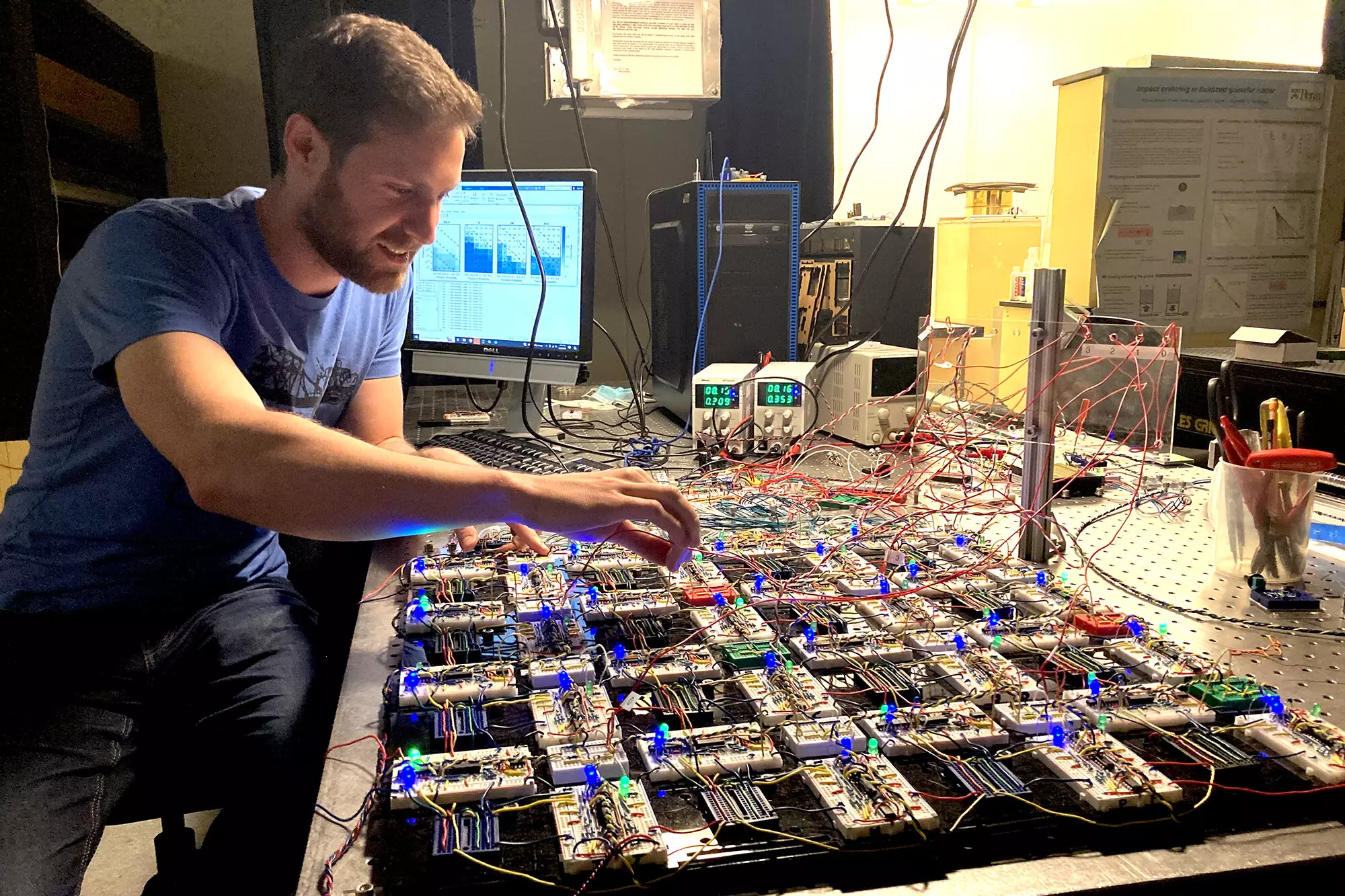

Physics and engineering researchers at the University of Pennsylvania have developed an analog system that addresses many of the limitations of existing machine learning systems. Called a contrastive local learning network, it evolves on its own: each component adjusts according to local rules, without any knowledge of the larger structure. This resembles how neurons in the human brain operate independently yet work collectively to enable learning.
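To make the idea of a "contrastive local rule" concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the researchers' circuit: it simulates a two-resistor voltage divider and uses one commonly described form of contrastive update, in which each edge compares its own voltage drop in a "free" state with its drop in a "clamped" state where the output is nudged toward the target. The function names, the parameters `eta` and `alpha`, and the divider itself are all hypothetical choices made for this example.

```python
def free_output(k1, k2, v_in=1.0):
    """Output voltage of a divider: edge 1 (conductance k1) joins input to output,
    edge 2 (conductance k2) joins output to ground."""
    return v_in * k1 / (k1 + k2)


def train_divider(target, k1=1.0, k2=1.0, eta=0.1, alpha=0.05,
                  steps=2000, v_in=1.0):
    """Adjust k1 and k2 so the divider output approaches `target`.

    Assumed rule (illustrative): each edge changes its conductance in
    proportion to (free drop)^2 - (clamped drop)^2, using only the voltage
    across itself. No edge sees the other edge or the global error.
    """
    for _ in range(steps):
        v_free = free_output(k1, k2, v_in)
        # Clamped state: output nudged a fraction eta of the way to the target.
        v_clamped = v_free + eta * (target - v_free)

        # Voltage drops across each edge in the two states.
        drop1_free, drop2_free = v_in - v_free, v_free
        drop1_clamped, drop2_clamped = v_in - v_clamped, v_clamped

        # Local contrastive updates, one per edge.
        k1 += (alpha / eta) * (drop1_free ** 2 - drop1_clamped ** 2)
        k2 += (alpha / eta) * (drop2_free ** 2 - drop2_clamped ** 2)

        # Conductances must stay positive in a physical resistor.
        k1 = max(k1, 1e-3)
        k2 = max(k2, 1e-3)
    return k1, k2


if __name__ == "__main__":
    k1, k2 = train_divider(target=0.8)
    print("learned output:", free_output(k1, k2))
```

The point of the sketch is that the update for each edge depends only on quantities measured across that edge, which is what "local" means here. When the free and clamped states agree, the updates vanish and learning stops.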

Unlike earlier analog learning systems that were limited to linear tasks, this self-learning analog system can handle more complicated ones, including “exclusive or” (XOR) relationships and nonlinear regression. That makes it a candidate for scaling up machine learning hardware without being held back by accumulating errors.
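Why “exclusive or” is a meaningful benchmark can be shown in a few lines of Python. The sketch below is only an illustration of the mathematical point, not a model of the analog hardware: it searches a grid of linear threshold units (the grid and function names are assumptions made for this example) and finds that none gets all four XOR cases right, while a small nonlinear composition of two thresholds does.

```python
from itertools import product

# The four XOR cases: output is 1 only when exactly one input is 1.
XOR_CASES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def best_linear_score(grid):
    """Try every (w1, w2, b) on the grid as a single linear threshold unit
    and return the largest number of XOR cases any of them gets right."""
    best = 0
    for w1, w2, b in product(grid, repeat=3):
        correct = sum(
            int(w1 * x1 + w2 * x2 + b > 0) == y
            for (x1, x2), y in XOR_CASES
        )
        best = max(best, correct)
    return best


def two_stage_xor(x1, x2):
    """XOR built from two thresholds feeding a third: (x1 OR x2) AND NOT (x1 AND x2)."""
    h_or = int(x1 + x2 - 0.5 > 0)
    h_and = int(x1 + x2 - 1.5 > 0)
    return int(h_or - h_and - 0.5 > 0)


if __name__ == "__main__":
    grid = [i / 4 for i in range(-8, 9)]  # weights and bias from -2 to 2
    print("best single linear unit:", best_linear_score(grid), "of 4")
    print("two-stage outputs:", [two_stage_xor(a, b) for (a, b), _ in XOR_CASES])
```

A single linear boundary can match at most three of the four cases, so any system that learns XOR must contain some nonlinearity.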

Implications for Future Research

The self-learning analog system also offers a way to study emergent learning. Because its dynamics are simple and its learning rules are local, researchers can examine how learning arises from many independent parts, and the resulting insights may carry over to other fields, including biology. The system can be trained precisely and is built from simple modular components, which makes it a useful model for exploring complex problems.

The research also has practical implications, particularly for interfacing with devices that collect data requiring processing, such as cameras and microphones. Researchers are already exploring ways to scale up the design and to answer open questions about memory storage, the effects of noise, network architecture, and the role of nonlinearities.

Self-learning analog systems represent a notable step forward for machine learning hardware. By combining local learning rules with analog processing, the researchers have built a system designed to be fast and low-power as well as adaptable and scalable. As the study of emergent learning progresses, this work could provide a foundation for new kinds of machine learning hardware.
